More details have emerged about how Facebook data on millions of US voters was handled after it was obtained in 2014 by UK political consultancy Cambridge Analytica for building psychographic profiles of Americans to target election messages for the Trump campaign.

The dataset — of more than 50M Facebook users — is at the center of a scandal that’s been engulfing the social network giant since newspaper revelations published on March 17 dropped privacy and data protection into the top of the news agenda.

A UK parliamentary committee has published a cache of documents provided to it by an ex CA employee, Chris Wylie, who gave public testimony in front of the committee at an oral hearing earlier this week. During that hearing he said he believes data on “substantially” more than 50M Facebookers was obtained by CA. Facebook has not commented publicly on that claim.

Among the documents the committee has published today (with some redactions) is the data-licensing contract between Global Science Research (GSR) — the company set up by the Cambridge University professor, Aleksandr Kogan, whose personality test app was used by CA as the vehicle for gathering Facebook users’ data — and SCL Elections (an affiliate of CA), dated June 4, 2014.

The document is signed by Kogan and CA’s now suspended CEO, Alexander Nix.

The contract stipulates that all monies transferred to GSR will be used for obtaining and processing the data for the project (“to further develop, add to, refine and supplement GS psychometric scoring algorithms, databases and scores”) and that none of the money paid to Kogan should be spent on other business purposes, such as salaries or office space, “unless otherwise approved by SCL”.

Wylie told the committee on Tuesday that CA chose to work with Kogan as he had agreed to work with them on acquiring and modeling the data first, without fixing commercial terms up front.

The contract also stipulates that Kogan’s company must gain “advanced written approval” from SCL to cover costs not associated with collecting the data, including “IT security”.

Which does rather underline CA’s priorities in this project: Obtain, as fast as possible, lots of personal data on US voters, but don’t worry much about keeping that personal information safe. Security is a backburner consideration in this contract.

CA responded to Wylie’s testimony on Tuesday with a statement rejecting his allegations — including claiming it “does not hold any GSR data or any data derived from GSR data”.

The company has not updated its press page with any new statement in light of the publication of a 2014 contract signed by its former CEO and GSR’s Kogan.

Earlier this week the committee confirmed that Nix has accepted its summons to return to give further evidence, saying the public session will likely take place on April 17.

Voter modeling across 11 US States

The first section of the contract between the CA affiliate company and GSR briefly describes the purpose of the project as being to conduct “political modeling” of the population in 11 US states.

On the data protection front, the contract includes a clause stating that both parties “warrant and undertake” to comply with all relevant privacy and data handling laws.

“Each of the parties warrants and undertakes that it will not knowingly do anything or permit anything to be done which might lead to a breach of any such legislation, regulations and/or directives by the other party,” it also states.

CA remains under investigation by the UK’s data protection watchdog, which obtained a warrant to enter its offices last week — and spent several hours gathering evidence. The company’s activities are being looked at as part of a wider investigation by the ICO into the use of data analytics for political purposes.

Commissioner Elizabeth Denham has previously said she’s leaning towards recommending a code of conduct for use of social media for political campaigning, and said she hopes to publish her report by May.

Another clause in the contract between GSR and SCL specifies that Kogan’s company will “seek out informed consent of the seed user engaging with GS Technology” — which would presumably refer to the ~270,000 people who agreed to take the personality quiz in the app deployed via Facebook’s platform.

Upon completion of the project, the contract specifies that Kogan’s company may continue to make use of SCL data for “academic research where no financial gain is made”.

Another clause details an additional research boon that would be triggered if Kogan was able to meet performance targets and deliver SCL with 2.1M matched records in the 11 US states it was targeting — so long as he met its minimum quality standards and at an averaged cost of $0.50 or less per matched record. In that event, he stood to also receive an SCL dataset of around 1M residents of Trinidad and Tobago — also “for use in academic research”.

The second section of the contract explains the project and its specification in detail.

Here it states that the aim of the project is “to infer psychological profiles”, using self-reported personality test data, political party preference and “moral value data”.

The 11 US states targeted by the project are also named as: Arkansas, Colorado, Florida, Iowa, Louisiana, Nevada, New Hampshire, North Carolina, Oregon, South Carolina and West Virginia.

The project is detailed in the contract as a seven-step process:

- Step 1: Kogan’s company, GSR, generates an initial seed sample using “online panels” (the contract does not specify here how large this sample is).
- Step 2: GSR analyzes this seed training data using its own “psychometric inventories” to try to determine personality categories.
- Step 3: Kogan’s personality quiz app is deployed on Facebook to gather the full dataset from respondents and also to scrape a subset of data from their Facebook friends. Here the contract notes: “upon consent of the respondent, the GS Technology scrapes and retains the respondent’s Facebook profile and a quantity of data on that respondent’s Facebook friends”.
- Step 4: The psychometric data from the seed sample, plus the Facebook profile data and friend data, is all run through proprietary modeling algorithms, which the contract specifies are based on using Facebook likes to predict personality scores, with the stated aim of predicting the “psychological, dispositional and/or attitudinal facets of each Facebook record”.
- Step 5: This generates a series of scores per Facebook profile.
- Step 6: These psychometrically scored profiles are matched with voter record data held by SCL, with the goal of matching (and thus scoring) at least 2M voter records for targeting voters across the 11 states.
- Step 7: The matched records are returned to SCL, which would then be in a position to craft messages to voters based on their modeled psychometric scores.

The “ultimate aim” of the psychometric profiling product Kogan built off of the training and Facebook data sets is imagined as “a ‘gold standard’ of understanding personality from Facebook profile information, much like charting a course to sail”.

The possibility for errors is noted briefly in the document but it adds: “Sampling in this phase [phase 1 training set] will be repeated until assumptions and distributions are met.”

In a later section, on demographic distribution analysis, the contract mentions the possibility for additional “targeted data collection procedures through multiple platforms” to be used, even including “brief phone scripts with single-trait questions”, in order to correct any skews that might be found once the Facebook data is matched with voter databases in each state (and assuming any “data gaps” could not be “filled in from targeted online samples”, as it also puts it).

In a section on “background and rational”, the contract states that Kogan’s models have been “validity tested” on users who were not part of the training sample, and further claims: “Trait predictions based on Facebook likes are at near test-rest levels and have been compared to the predictions their romantic partners, family members, and friends make about their traits”.

“In all the previous cases, the computer-generated scores performed the best. Thus, the computer-generated scores can be more accurate than even the knowledge of very close friends and family members,” it adds.

His technology is described as “different from most social research measurement instruments” in that it is not solely based on self-reported data — with the follow-on claim being made that: “Using observed data from Facebook users’ profiles makes GS’ measurements genuinely behavioral.”

That suggestion, at least, seems fairly tenuous — given that a portion of Facebook users are undoubtedly aware that the site is tracking their activity when they use it, which in turn is likely to affect how they use Facebook.

So the idea that Facebook usage is a 100% naked reflection of personality deserves far more critical questioning than Kogan’s description of it in the contract with SCL.

And, indeed, some of the commentary around this news story has queried the value of the entire exposé by suggesting CA’s psychometric targeting wasn’t very effective — ergo, it may not have had a significant impact on the US election.

In contrast to claims being made for his technology in the 2014 contract, Kogan himself claimed in a TV interview earlier this month (after the scandal broke) that his predictive modeling was not very accurate at an individual level — suggesting it would only be useful in aggregate to, for example, “understand the personality of New Yorkers”.

Yesterday Channel 4 News reported that it had been able to obtain some of the data Kogan modeled for CA — supporting Wylie’s testimony that CA had not locked down access to the data.

In its report, the broadcaster spoke to some of the named US voters in Colorado — showing them the scores Kogan’s models had given them. Unsurprisingly, not all their interviewees thought the scores were an accurate reflection of who they were.

However, regardless of how effective (or not) Kogan’s methods were, the bald fact that personal information on 50M+ Facebook users was so easily sucked out of the platform is of unquestionable public interest and concern.

The added fact that this data set was used for psychological modeling for political message targeting purposes without people’s knowledge or consent just further underlines the controversy. Whether the political microtargeting method worked well or was hit and miss is really by the by.

In the contract, Kogan’s psychological profiling methods are described as “less costly, more detailed, and more quickly collected” than other individual profiling methods, such as “standard political polling or phone samples”.

The contract also flags up how the window of opportunity for his approach was closing — at least on Facebook’s platform. “GS’s method relies on a pre-existing application functioning under Facebook’s old terms of service,” it observes. “New applications are not able to access friend networks and no other psychometric profiling applications exist under the old Facebook terms.”

As I wrote last weekend, Facebook faced a legal challenge to the lax system of app permissions it operated in 2011. And after a data protection audit and re-audit by the Irish Data Protection Commissioner, in 2011 and 2012, the regulator recommended it shutter developers’ access to friend networks — which Facebook finally did (for both old and new apps) as of mid 2015.

But in mid 2014 existing developers on its platform could still access the data — as Kogan was able to, handing it off to SCL and its affiliates.

Other documents published by the committee today include a contract between Aggregate IQ (a Canadian data company which Wylie described to the committee as ‘CA Canada’, aka yet another affiliate of CA/SCL) and SCL Elections.

This contract, which is dated September 15, 2014, is for the: “Design and development of an Engagement Platform System”, also referred to as “the Ripon Platform”, and described as: “A scalable engagement platform that leverages the strength of SCLs modelling data, providing an actionable toolset and dashboard interface for the target campaigns in the 2014 election cycle. This will consist of a bespoke engagement platform (SCL Engage) to help make SCLs behavioural microtargeting data actionable while making campaigns more accountable to donors and supporter”.

Another contract between Aggregate IQ and SCL is dated November 25, 2013, and covers the delivery of a CRM system, a website and “the acquisition of online data” for a political party in Trinidad and Tobago. In this contract a section on “behavioral data acquisition” details their intentions thus:

- Identify and obtain qualified sources of data that illustrate user behaviour and contribute to the development of psychographic profiling in the region
- This data may include, but is not limited to:
  - Internet Service Provider (ISP) log files
  - First party data logs
  - Third party data logs
  - Ad network data
  - Social bookmarking
  - Social media sharing (Twitter, FB, MySpace)
  - Natural Language Processing (NLP) of URL text and images
  - Reconciliation of IP and User-Agent to home address, census tract, or dissemination area

In his evidence to the committee on Tuesday, Wylie described the AIQ Trinidad project as a “pre-cursor to the Ripon project to see how much data could be pulled and could we profile different attributes in people”.

He also alleged AIQ has used hacker-type techniques to obtain data. “AIQ’s role was to go and find data,” he told the committee. “The contracting is pulling ISP data and there’s also emails that I’ve passed on to the committee where AIQ is working with SCL to find ways to pull and then de-anonymize ISP data. So, like, raw browsing data.”

Another document in the bundle published today details a project pitch by SCL to carry out $200,000 worth of microtargeting and political campaign work for the conservative organization ForAmerica.org — for “audience building and supporter mobilization campaigns”.

There is also an internal SCL email chain regarding a political targeting project that appears to involve the Kogan-modeled Facebook data, which is referred to as the “Bolton project” (which seems to refer to work done for the now US national security advisor, John Bolton), with some back and forth over concerns about delays and problems with data matching in some of the US states and overall data quality.

“Need to present the little information we have on the 6,000 seeders to [sic] we have to give a rough and ready and very preliminary reading on that sample ([name redacted] will have to ensure the appropriate disclaimers are in place to manage their expectations and the likelihood that the results will change once more data is received). We need to keep the client happy,” is one of the suggested next steps in an email written by an unidentified SCL staffer working on the Bolton project.

“The Ambassador’s team made it clear that he would want some kind of response on the last round of foreign policy questions. Though not ideal, we will simply piss off a man who is potentially an even bigger client if we remain silent on this because it has been clear to us this is something he is particularly interested in,” the emailer also writes.

“At this juncture, we unfortunately don’t have the luxury of only providing the perfect data set but must deliver something which shows the validity of what we have been promising we can do,” the emailer adds.

Another document is a confidential memorandum prepared for Rebekah Mercer (the daughter of US billionaire Robert Mercer; Wylie has said Mercer provided the funding to set up CA), former Trump advisor Steve Bannon and the (now suspended) CA CEO Alexander Nix advising them on the legality of a foreign corporation (i.e. CA), and foreign nationals (such as Nix and others), carrying out work on US political campaigns.

This memo also details the legal structure of SCL and CA — the former being described as a “minority owner” of CA. It notes:

With this background we must look first at Cambridge Analytica, LLC (“Cambridge”) and then at the people involved and the contemplated tasks. As I understand it, Cambridge is a Delaware Limited Liability Company that was formed in June of 2014. It is operated through 5 managers, three preferred managers, Ms. Rebekah Mercer, Ms. Jennifer Mercer and Mr. Stephen Bannon, and two common managers, Mr. Alexander Nix and a person to be named. The three preferred managers are all United States citizens, Mr. Nix is not. Cambridge is primarily owned and controlled by US citizens, with SCL Elections Ltd., (“SCL”) a UK limited company being a minority owner. Moreover, certain intellectual property of SCL was licensed to Cambridge, which intellectual property Cambridge could use in its work as a US company in US elections, or other activities.

On the salient legal advice point, the memo concludes that US laws prohibiting foreign nationals managing campaigns — “including making direct or indirect decisions regarding the expenditure of campaign dollars” — will have “a significant impact on how Cambridge hires staff and operates in the short term”.

from Social – TechCrunch https://ift.tt/2uv3piT

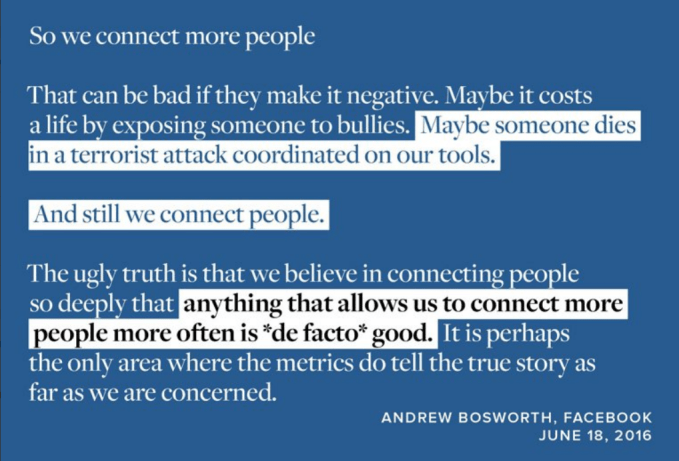

How to stop morale’s downward momentum will be one of Facebook’s greatest tests of leadership. This isn’t a bug to be squashed. It can’t just roll back a feature update. And an apology won’t suffice. It will have to expel or reeducate the leakers and disloyal without instilling a witchunt’s sense of dread. Compensation may have to jump upwards to keep talent aboard

How to stop morale’s downward momentum will be one of Facebook’s greatest tests of leadership. This isn’t a bug to be squashed. It can’t just roll back a feature update. And an apology won’t suffice. It will have to expel or reeducate the leakers and disloyal without instilling a witchunt’s sense of dread. Compensation may have to jump upwards to keep talent aboard

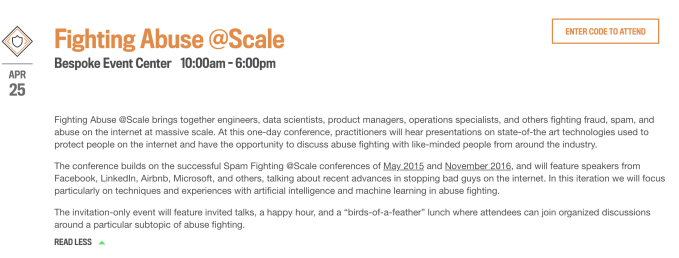

Fighting Abuse @Scale will be held at the Bespoke Event Center within the Westfield Mall in SF. We can expect more technical details about the new proactive artificial intelligence tools Facebook announced today during a conference call about

Fighting Abuse @Scale will be held at the Bespoke Event Center within the Westfield Mall in SF. We can expect more technical details about the new proactive artificial intelligence tools Facebook announced today during a conference call about