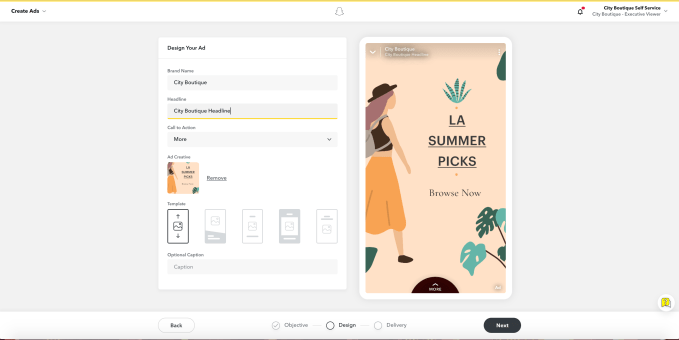

Snapchat launches ‘instant’ tool for creating vertical ads

Snapchat is hoping to attract new advertisers (and make advertising easier for the ones already on the platform) with the launch of a new tool called Instant Create.

Some of these potential advertisers may not be used to creating ads in the smartphone-friendly vertical format that Snapchat has popularized, so Instant Create is designed to make the process as simple as possible.

Executives at parent organization Snap discussed the tool during last week’s earnings call (in which the company reported that its daily active users increased to 203 million).

“Just this month we started testing our new Instant Create on-boarding flow, which generates ads for businesses in three simple steps from their existing assets, be it their app or their ecommerce storefront,” said CEO Evan Spiegel.

Now the product is moving from testing to availability for all advertisers using Snapchat’s self-serve Ads Manager.

Those three steps that Spiegel mentioned involve identifying the objective of a campaign (website visits, app installs or app visits), entering your website address and finalizing your audience targeting.

You can upload your creative assets if you want, but that’s not required since Instant Create will also import images from your website. And Snap notes that you won’t need to do any real design work, because there’s “a streamlined ad creation flow that leverages our most popular templates and simplified ad detail options, enabling you to publish engaging creative without additional design resources.”

The goal is to make Snapchat advertising accessible to smaller advertisers who may not have the time or resources to try to understand new ad formats. After all, on that same earnings call, Chief Business Officer Jeremi Gorman said, “We believe the single biggest driver for our revenue in the short to medium term will be increasing the number of active advertisers using Snapchat.”

Instant Create is currently focused on Snapchat’s main ad format, Snap Ads. You can read more in the company’s blog post.

from Social – TechCrunch https://ift.tt/2OvkiEh

via IFTTT

Brittany Kaiser dumps more evidence of Brexit’s democratic trainwreck

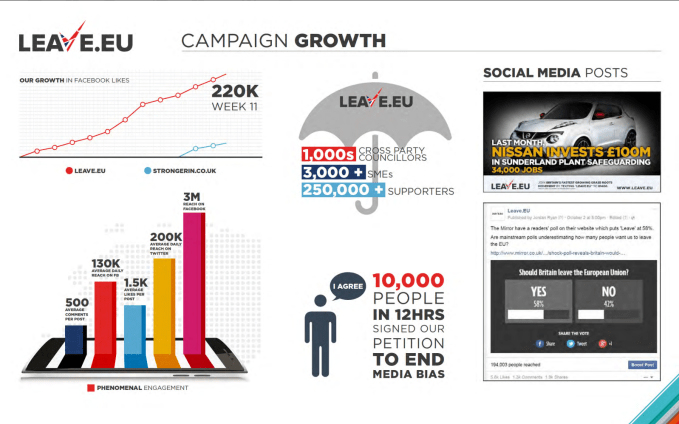

A UK parliamentary committee has published new evidence fleshing out how membership data was passed from UKIP, a pro-Brexit political party, to Leave.EU, a Brexit supporting campaign active in the 2016 EU referendum — via the disgraced and now defunct data company, Cambridge Analytica.

In evidence sessions last year, during the DCMS committee’s enquiry into online disinformation, it was told by both the former CEO of Cambridge Analytica, and the main financial backer of the Leave.EU campaign, the businessman Arron Banks, that Cambridge Analytica did no work for the Leave.EU campaign.

Documents published today by the committee clearly contradict that narrative — revealing internal correspondence about the use of a UKIP dataset to create voter profiles to carry out “national microtargeting” for Leave.EU.

They also show CA staff raising concerns about the legality of the plan to model UKIP data to enable Leave.EU to identify and target receptive voters with pro-Brexit messaging.

The UK’s 2016 in-out EU referendum saw the public narrowly vote to leave, by 52:48.

New evidence from Brittany Kaiser

The evidence, which includes emails between key Cambridge Analytica, Leave.EU and UKIP employees, has been submitted to the DCMS committee by Brittany Kaiser, a former director of CA (whom you may just have seen occupying a central role in Netflix’s The Great Hack documentary, which digs into links between the Trump campaign and the Brexit campaign).

We have just published new evidence from Brittany Kaiser relating to Cambridge Analytica, UKIP and Leave EU – you can read them all here @CommonsCMS https://t.co/2LjfJiozO6 #TheGreatHack

— Damian Collins (@DamianCollins) July 30, 2019

“As you can see with the evidence… chargeable work was completed for UKIP and Leave.EU, and I have strong reasons to believe that those datasets and analysed data processed by Cambridge Analytica as part of a Phase 1 payable work engagement… were later used by the Leave.EU campaign without Cambridge Analytica’s further assistance,” writes Kaiser in a covering letter to committee chair, Damian Collins, summarizing the submissions.

Kaiser gave oral evidence to the committee at a public hearing in April last year.

At the time she said CA had been undertaking parallel pitches for Leave.EU and UKIP (as well as for two insurance brands owned by Banks) and had used membership survey data provided by UKIP to build a model for pro-Brexit voter personality types, with the intention of it being used “to benefit Leave.EU”.

“We never had a contract with Leave.EU. The contract was with the UK Independence party for the analysis of this data, but it was meant to benefit Leave.EU,” she said then.

The new emails submitted by Kaiser back up her earlier evidence. They also show there was discussion of drawing up a contract between CA, UKIP and Leave.EU in the fall before the referendum vote.

In one email — dated November 10, 2015 — CA’s COO & CFO, Julian Wheatland, writes that: “I had a call with [Leave.EU’s] Andy Wigmore today (Arron’s right hand man) and he confirmed that, even though we haven’t got the contract with the Leave written up, it’s all under control and it will happen just as soon as [UKIP-linked lawyer] Matthew Richardson has finished working out the correct contract structure between UKIP, CA and Leave.”

Another item Kaiser has submitted to the committee is a separate November email from Wigmore, inviting press to a briefing by Leave.EU — entitled “how to win the EU referendum” — an event at which Kaiser gave a pitch on CA’s work. In this email Wigmore describes the firm as “the worlds leading target voter messaging campaigners”.

In another document, CA’s Wheatland is shown in an email thread ahead of that presentation telling Wigmore and Richardson “we need to agree the line in the presentations next week with regards the origin of the data we have analysed”.

“We have generated some interesting findings that we can share in the presentation, but we are certain to be asked where the data came from. Can we declare that we have analysed UKIP membership and survey data?” he then asks.

UKIP’s Richardson replies with a negative, saying: “I would rather we didn’t, to be honest” — adding that he has a meeting with Wigmore to discuss “all of this”, and ending with: “We will have a plan by the end of that lunch, I think”.

In another email, dated November 10, sent to multiple recipients ahead of the presentation, Wheatland writes: “We need to start preparing Brittany’s presentation, which will involve working with some of the insights David [Wilkinson, CA’s chief data scientist] has been able to glean from the UKIP membership data.”

He also asks Wilkinson if he can start to “share insights from the UKIP data”, as well as asking “when are we getting the rest of the data?”. (In a later email, dated November 16, Wilkinson shares plots of modelled data with Kaiser, apparently showing the UKIP data now segmented into four blocks of Brexit supporters, which have been named: ‘Eager activist’; ‘Young reformer’; ‘Disaffected Tories’; and ‘Left behinds’.)

In the same email Wheatland instructs Jordanna Zetter, an employee of CA’s parent company SCL, to brief Kaiser on “how to field a variety of questions about CA and our methodology, but also SCL. Rest of the world, SCL Defence etc” — asking her to liaise with other key SCL/CA staff to “produce some ‘line to take’ notes”.

Another document in the bundle appears to show Kaiser’s talking points for the briefing. These make no mention of CA’s intention to carry out “national microtargeting” for Leave.EU — merely saying it will conduct “message testing and audience segmentation”.

“We will be working with the campaign’s pollsters and other vendors to compile all the data we have available to us,” is another of the bland talking points Kaiser was instructed to feed to the press.

“Our team of data scientists will conduct deep-dive analysis that will enable us to understand the electorate better than the rival campaigns,” is one more unenlightening line intended for public consumption.

But while CA was preparing to present the UK media with a sanitized false narrative to gloss over the individual voter targeting work it actually intended to carry out for Leave.EU, behind the scenes concerns were being raised about how “national microtargeting” would conflict with UK data protection law.

Another email thread, started November 19, highlights internal discussion about the legality of the plan — with Wheatland sharing “written advice from Queen’s Counsel on the question of how we can legally process data in the UK, specifically UKIP’s data for Leave.eu and also more generally”. (Although Kaiser has not shared the legal advice itself.)

Wilkinson replies to this email with what he couches as “some concerns” regarding shortfalls in the advice, before going into detail on how CA is intending to further process the modelled UKIP data in order to individually microtarget Brexit voters, which he suggests would not be legal under UK data protection law “as the identification of these people would constitute personal data”.

He writes:

I have some concerns about what this document says is our “output” – points 22 to 24. Whilst it includes what we have already done on their data (clustering and initial profiling of their members, and providing this to them as summary information), it does not say anything about using the models of the clusters that we create to extrapolate to new individuals and infer their profile. In fact it says that our output does not identify individuals. Thus it says nothing about our microtargeting approach typical in the US, which I believe was something that we wanted to do with leave eu data to identify how each their supporters should be contacted according to their inferred profile.

For example, we wouldn’t be able to show which members are likely to belong to group A and thus should be messaged in this particular way – as the identification of these people would constitute personal data. We could only say “group A typically looks like this summary profile”.
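The distinction Wilkinson draws here, between summary output per group and inferring the group of a specific individual, can be illustrated with a toy sketch. Nothing in the published emails describes CA's actual models; the nearest-centroid approach, the function names and the two-number feature vectors below are all assumptions made purely for illustration.

```typescript
// Toy illustration only: an assumed nearest-centroid model, not CA's method.
type Vec = number[];

// Aggregate output: the average of a group's members ("group A typically
// looks like this summary profile"). No individual is identified.
function summaryProfile(members: Vec[]): Vec {
  const dims = members[0].length;
  const sums = new Array<number>(dims).fill(0);
  for (const m of members) {
    for (let i = 0; i < dims; i++) sums[i] += m[i];
  }
  return sums.map((s) => s / members.length);
}

function squaredDistance(a: Vec, b: Vec): number {
  let total = 0;
  for (let i = 0; i < a.length; i++) total += (a[i] - b[i]) ** 2;
  return total;
}

// Individual-level inference: attach a group label to one specific person
// by picking the closest summary profile.
function inferGroup(person: Vec, profiles: Record<string, Vec>): string {
  let best = "";
  let bestDist = Infinity;
  for (const [name, profile] of Object.entries(profiles)) {
    const d = squaredDistance(person, profile);
    if (d < bestDist) {
      bestDist = d;
      best = name;
    }
  }
  return best;
}

// Hypothetical data: two groups described by two made-up survey scores.
const profiles: Record<string, Vec> = {
  A: summaryProfile([[0, 0], [2, 2]]),
  B: summaryProfile([[10, 10], [12, 8]]),
};
const labelForNewPerson = inferGroup([1.5, 0.5], profiles);
```

In this sketch `summaryProfile` corresponds to the kind of output the email says the legal advice covers, while `inferGroup` is the extra step of extrapolating to a new individual that Wilkinson flags as missing from it.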

Wilkinson ends by asking for clarification ahead of a looming meeting with Leave.EU, saying: “It would be really useful to have this clarified early on tomorrow, because I was under the impression it would be a large part of our product offering to our UK clients.” [emphasis ours]

Wheatland follows up with a one-line email asking Richardson to “comment on David’s concern”. Richardson then chips into the discussion, saying there’s “some confusion at our end about where this data is coming from and going to”.

He goes on to summarize the “premises” of the advice he says UKIP was given regarding sharing the data with CA (and afterwards the modelled data with Leave.EU, as he implies is the plan) — writing that his understanding is that CA will return: “Analysed Data to UKIP”, and then: “As the Analysed Dataset contains no personal data UKIP are free to give that Analysed Dataset to anyone else to do with what they wish. UKIP will give the Analysed Dataset to Leave.EU”.

“Could you please confirm that the above is correct?” Richardson goes on. “Do I also understand correctly that CA then intend to use the Analysed Dataset and overlay it on Leave.EU’s legitimately acquired data to infer (interpolate) profiles for each of their supporters so as to better control the messaging that leave.eu sends out to those supporters?

“Is it also correct that CA then intend to use the Analysed Dataset and overlay it on publicly available data to infer (interpolate) which members of the public are most likely to become Leave.EU supporters and what messages would encourage them to do so?

“If these understandings are not correct please let me know and I will give you a call to discuss this.”

About half an hour later another SCL Group employee, Peregrine Willoughby-Brown, joins the discussion to back up Wilkinson’s legal concerns.

“The [Queen’s Counsel] opinion only seems to be an analysis of the legality of the work we have already done for UKIP, rather than any judgement on whether or not we can do microtargeting. As such, whilst it is helpful to know that we haven’t already broken the law, it doesn’t offer clear guidance on how we can proceed with reference to a larger scope of work,” she writes without apparent alarm at the possibility that the entire campaign plan might be illegal under UK privacy law.

“I haven’t read it in sufficient depth to know whether or not it offers indirect insight into how we could proceed with national microtargeting, which it may do,” she adds — ending by saying she and a colleague will discuss it further “later today”.

It’s not clear whether concerns about the legality of the microtargeting plan derailed the signing of any formal contract between Leave.EU and CA — even though the documents imply data was shared, even if only during the scoping stage of the work.

“The fact remains that chargeable work was done by Cambridge Analytica, at the direction of Leave.EU and UKIP executives, despite a contract never being signed,” writes Kaiser in her cover letter to the committee on this. “Despite having no signed contract, the invoice was still paid, not to Cambridge Analytica but instead paid by Arron Banks to UKIP directly. This payment was then not passed onto Cambridge Analytica for the work completed, as an internal decision in UKIP, as their party was not the beneficiary of the work, but Leave.EU was.”

Kaiser has also shared a presentation of the UKIP survey data, which bears the names of three academics: Harold Clarke, University of Texas at Dallas & University of Essex; Matthew Goodwin, University of Kent; and Paul Whiteley, University of Essex, which details results from the online portion of the membership survey — aka the core dataset CA modelled for targeting Brexit voters with the intention of helping the Leave.EU campaign.

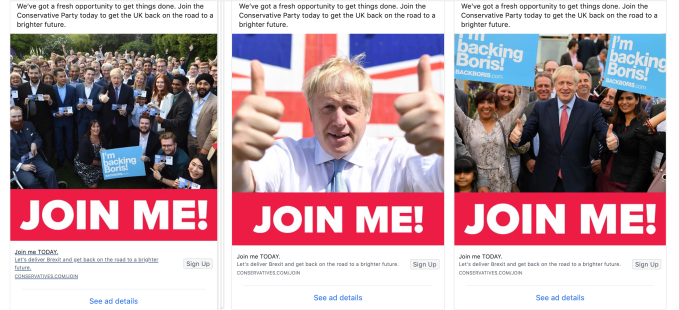

(At a glance, this survey suggests there’s an interesting analysis waiting to be done of the choice of target demographics for the current blitz of campaign message testing ads being run on Facebook by the new (pro-Brexit) UK prime minister Boris Johnson and the core UKIP demographic, as revealed by the survey data… )


Call for Leave.EU probe to be reopened

Ian Lucas, MP, a member of the DCMS committee has called for the UK’s Electoral Commission to re-open its investigation into Leave.EU in view of “additional evidence” from Kaiser.

The EC should re-open their investigation into LeaveEU in view of the additional evidence from Brittany Kaiser via @CommonsCMS

— Ian Lucas MP (@IanCLucas) July 30, 2019

We reached out to the Electoral Commission to ask if it will be revisiting the matter.

An Electoral Commission spokesperson told us: “We are considering this new information in relation to our role regulating campaigner activity at the EU referendum. This relates to the 10 week period leading up to the referendum and to campaigning activity specifically aimed at persuading people to vote for a particular outcome.

“Last July we did impose significant penalties on Leave.EU for committing multiple offences under electoral law at the EU Referendum, including for submitting an incomplete spending return.”

Last year the Electoral Commission also found that the official Vote Leave Brexit campaign broke the law by breaching election campaign spending limits. It channelled money to a Canadian data firm linked to Cambridge Analytica to target political ads on Facebook’s platform, via undeclared joint working with a youth-focused Brexit campaign, BeLeave.

Six months ago the UK’s data watchdog also issued fines against Leave.EU and Banks’ insurance company, Eldon Insurance, having found what it dubbed “serious” breaches of electronic marketing laws, including the campaign using insurance customers’ details to unlawfully send almost 300,000 political marketing messages.

A spokeswoman for the ICO told us it does not have a statement on Kaiser’s latest evidence but added that its enforcement team “will be reviewing the documents released by DCMS”.

The regulator has been running a wider enquiry into use of personal data for social media political campaigning. And last year the information commissioner called for an ethical pause on its use — warning that trust in democracy risked being undermined.

And while Facebook has since applied a thin film of ‘political ads’ transparency to its platform (which researchers continue to warn is not nearly transparent enough to quantify political use of its ads platform), UK election campaign laws have yet to be updated to take account of the digital firehoses now (il)liberally shaping political debate and public opinion at scale.

It’s now more than three years since the UK’s shock vote to leave the European Union — a vote that has so far delivered three years of divisive political chaos, despatching two prime ministers and derailing politics and policymaking as usual.

Many questions remain over a referendum that continues to be dogged by scandals — from breaches of campaign spending; to breaches of data protection and privacy law; and indeed the use of unregulated social media — principally Facebook’s ad platform — as the willing conduit for distributing racist dogwhistle attack ads and political misinformation to whip up anti-EU sentiment among UK voters.

Dark money, dark ads, and the importing of US-style campaign tactics into the UK, circumventing election and data protection laws by the digital platform backdoor.

This is why the DCMS committee’s preliminary report last year called on the government to take “urgent action” to “build resilience against misinformation and disinformation into our democratic system”.

The very same minority government, struggling to hold itself together in the face of Brexit chaos, failed to respond to the committee’s concerns — and has now been replaced by a cadre of the most militant Brexit backers, who are applying their hands to the cheap and plentiful digital campaign levers.

The UK’s new prime minister, Boris Johnson, is demonstrably doubling down on political microtargeting: Appointing no less than Dominic Cummings, the campaign director of the official Vote Leave campaign, as a special advisor.

At the same time Johnson’s team is firing out a flotilla of Facebook ads — including ads that appear intended to gather voter sentiment for the purpose of crafting individually targeted political messages for any future election campaign.

So it’s full steam ahead with the Facebook ads…

Yet this ‘democratic reset’ is laid right atop the Brexit trainwreck. It’s coupled to it, in fact.

Cummings worked for the self same Vote Leave campaign that the Electoral Commission found illegally funnelled money — via Cambridge Analytica-linked Canadian data firm AggregateIQ — into a blitz of microtargeted Facebook ads intended to sway voter opinion.

Vote Leave also faced questions over its use of a Facebook-run football competition promising a £50M prize-pot to fans in exchange for handing over a bunch of personal data ahead of the referendum, including how they planned to vote. Another data grab wrapped in fancy dress, much like GSR’s thisisyourlife quiz app that provided the foundational dataset for CA’s psychological voter profiling work on the Trump campaign.

The elevating of Cummings to be special adviser to the UK PM represents the polar opposite of an ‘ethical pause’ in political microtargeting.

Make no mistake, this is the Brexit campaign playbook — back in operation, now with full-bore pedal to the metal. (With his hands now on the public purse, Johnson has pledged to spend £100M on marketing to sell a ‘no deal Brexit’ to the UK public.)

Kaiser’s latest evidence may not contain a smoking bomb big enough to blast the issue of data-driven and tech giant-enabled voter manipulation into a mainstream consciousness, where it might have the chance to reset the political conscience of a nation — but it puts more flesh on the bones of how the self-styled ‘bad boys of Brexit’ pulled off their shock win.

In The Great Hack the Brexit campaign is couched as the ‘petri dish’ for the data-fuelled targeting deployed by the firm in the 2016 US presidential election — which delivered a similarly shock victory for Trump.

If that’s so, these latest pieces of evidence imply a suggestively close link between CA’s experimental modelling of UKIP supporter data, as it shifted gears to apply its dark arts closer to home than usual, and the models it subsequently built off of US citizens’ data sucked out of Facebook. And that in turn goes some way to explaining the cosiness between Trump and UKIP founder Nigel Farage…

So Donald Trump wants Nigel Farage on any trade negotiating team?

Maybe this is how #Brexit will end?

Not with a bang, not with a cliff edge, but with a great Tory shriek of revulsion? #nodealbrexit #ridge #marr #bbcbh #farage pic.twitter.com/iCn6hd9eka

— carol hedges (@carolJhedges) July 28, 2019

Kaiser ends her letter to DCMS writing: “Given the enormity of the implications of earlier inaccurate conclusions by different investigations, I would hope that Parliament reconsiders the evidence submitted here in good faith. I hope that these ten documents are helpful to your research and furthering the transparency and truth that your investigations are seeking, and that the people of the UK and EU deserve”.

Banks and Wigmore have responded to the publication in their usual style, with a pair of dismissive tweets — questioning Kaiser’s motives for wanting the data to be published and throwing shade on how the evidence was obtained in the first place.

You mean the professional whistleblower who’s making a career of making stuff up with a book deal and failed Netflix film! The witch-hunt so last season !! https://t.co/f2rsPfoDdT

— Arron Banks (@Arron_banks) July 30, 2019

Desperate stuff @DamianCollins lol

know one cares – oh and are those emails obtained illegally – computer misuse act – tut tut stolen data, oh the irony https://t.co/BNWxUJmwoQ

— Andy Wigmore (@andywigmore) July 30, 2019

from Social – TechCrunch https://ift.tt/315tAbu


A guide to Virtual Beings and how they impact our world

Money from big tech companies and top VC firms is flowing into the nascent “virtual beings” space. Mixing the opportunities presented by conversational AI, generative adversarial networks, photorealistic graphics, and creative development of fictional characters, “virtual beings” envisions a near-future where characters (with personalities) that look and/or sound exactly like humans are part of our day-to-day interactions.

Last week in San Francisco, entrepreneurs, researchers, and investors convened for the first Virtual Beings Summit, where organizer and Fable Studio CEO Edward Saatchi announced a grant program. Corporates like Amazon, Apple, Google, and Microsoft are pouring resources into conversational AI technology, chip-maker Nvidia and game engines Unreal and Unity are advancing real-time ray tracing for photorealistic graphics, and in my survey of media VCs one of the most common interests was “virtual influencers”.

The term “virtual beings” gets used as a catch-all categorization of activities that overlap here. There are really three separate fields getting conflated though:

- Virtual Companions

- Humanoid Character Creation

- Virtual Influencers

These can overlap — there are humanoid virtual influencers for example — but they represent separate challenges, separate business opportunities, and separate societal concerns. Here’s a look at these fields, including examples from the Virtual Beings Summit, and how they collectively comprise this concept of virtual beings:

Virtual companions

Virtual companions are conversational AI that build a unique 1-to-1 relationship with us, whether to provide friendship or utility. A virtual companion has personality, gauges the personality of the user, retains memory of prior conversations, and uses all that to converse with humans like a fellow human would. They seem to exist as their own being even if we rationally understand they are not.

Virtual companions can exist across 4 formats:

- Physical presence (Robotics)

- Interactive visual media (social media, gaming, AR/VR)

- Text-based messaging

- Interactive voice

While pop culture depictions of this include Her and Ex Machina, nascent real-world examples are virtual friend bots like Hugging Face and Replika as well as voice assistants like Amazon’s Alexa and Apple’s Siri. The products currently on the market aren’t yet sophisticated conversationalists or adept at engaging with us as emotional creatures but they may not be far off from that.

from Social – TechCrunch https://ift.tt/2K7D1QI


Facebook and YouTube’s moderation failure is an opportunity to deplatform the platforms

Facebook, YouTube, and Twitter have failed their task of monitoring and moderating the content that appears on their sites; what’s more, they failed to do so well before they knew it was a problem. But their incidental cultivation of fringe views is an opportunity to recast their role as the services they should be rather than the platforms they have tried so hard to become.

The struggles of these juggernauts should be a spur to innovation elsewhere: While the major platforms reap the bitter harvest of years of ignoring the issue, startups can pick up where they left off. There’s no better time to pass someone up than when they’re standing still.

Asymmetrical warfare: Is there a way forward?

At the heart of the content moderation issue is a simple cost imbalance that rewards aggression by bad actors while punishing the platforms themselves.

To begin with, there is the problem of defining bad actors in the first place. This is a cost that must be borne from the outset by the platform: With the exception of certain situations where they can punt (definitions of hate speech or groups for instance), they are responsible for setting the rules on their own turf.

That’s a reasonable enough expectation. But carrying it out is far from trivial; you can’t just say “here’s the line; don’t cross it or you’re out.” It is becoming increasingly clear that these platforms have put themselves in an uncomfortable lose-lose situation.

If they have simple rules, they spend all their time adjudicating borderline cases, exceptions, and misplaced outrage. If they have more granular ones, there is no upper limit on the complexity and they spend all their time defining it to fractal levels of detail.

Both solutions require constant attention and an enormous, highly-organized and informed moderation corps, working in every language and region. No company has shown any real intention to take this on — Facebook famously contracts the responsibility out to shabby operations that cut corners and produce mediocre results (at huge human and monetary cost); YouTube simply waits for disasters to happen and then quibbles unconvincingly.

from Social – TechCrunch https://ift.tt/2K4XcjD


Europe’s top court sharpens guidance for sites using leaky social plug-ins

Europe’s top court has made a ruling that could affect scores of websites that embed the Facebook ‘Like’ button and receive visitors from the region.

The ruling by the Court of Justice of the EU states such sites are jointly responsible for the initial data processing — and must either obtain informed consent from site visitors prior to data being transferred to Facebook, or be able to demonstrate a legitimate interest legal basis for processing this data.

The ruling is significant because, as currently seems to be the case, Facebook’s Like buttons transfer personal data automatically, when a webpage loads — without the user even needing to interact with the plug-in — which means if websites are relying on visitors’ ‘consenting’ to their data being shared with Facebook they will likely need to change how the plug-in functions to ensure no data is sent to Facebook prior to visitors being asked if they want their browsing to be tracked by the adtech giant.

The background to the case is a complaint against online clothes retailer, Fashion ID, by a German consumer protection association, Verbraucherzentrale NRW — which took legal action in 2015 seeking an injunction against Fashion ID’s use of the plug-in which it claimed breached European data protection law.

Like ’em or loathe ’em, Facebook’s ‘Like’ buttons are an impossible-to-miss component of the mainstream web. Though most Internet users are likely unaware that the social plug-ins are used by Facebook to track what other websites they’re visiting for ad targeting purposes.

Last year the company told the UK parliament that between April 9 and April 16 the button had appeared on 8.4M websites, while its Share button social plug-in appeared on 931K sites. (Facebook also admitted to 2.2M instances of another tracking tool it uses to harvest non-Facebook browsing activity — called a Facebook Pixel — being invisibly embedded on third party websites.)

The Fashion ID case predates the introduction of the EU’s updated privacy framework, GDPR, which further toughens the rules around obtaining consent — meaning it must be purpose specific, informed and freely given.

Today’s CJEU decision also follows another ruling a year ago, in a case related to Facebook fan pages, when the court took a broad view of privacy responsibilities around platforms — saying both fan page administrators and host platforms could be data controllers. Though it also said joint controllership does not necessarily imply equal responsibility for each party.

In the latest decision the CJEU has sought to draw some limits on the scope of joint responsibility, finding that a website where the Facebook Like button is embedded cannot be considered a data controller for any subsequent processing, i.e. after the data has been transmitted to Facebook Ireland (the data controller for Facebook’s European users).

The joint responsibility specifically covers the collection and transmission of Facebook Like data to Facebook Ireland.

“It seems, at the outset, impossible that Fashion ID determines the purposes and means of those operations,” the court writes in a press release announcing the decision.

“By contrast, Fashion ID can be considered to be a controller jointly with Facebook Ireland in respect of the operations involving the collection and disclosure by transmission to Facebook Ireland of the data at issue, since it can be concluded (subject to the investigations that it is for the Oberlandesgericht Düsseldorf [German regional court] to carry out) that Fashion ID and Facebook Ireland determine jointly the means and purposes of those operations.”

Responding to the judgement in a statement attributed to its associate general counsel, Jack Gilbert, Facebook told us:

Website plugins are common and important features of the modern Internet. We welcome the clarity that today’s decision brings to both websites and providers of plugins and similar tools. We are carefully reviewing the court’s decision and will work closely with our partners to ensure they can continue to benefit from our social plugins and other business tools in full compliance with the law.

The company said it may make changes to the Like button to ensure websites that use it are able to comply with Europe’s GDPR.

It’s not clear what specific changes these could be: for example, whether Facebook will change the code of its social plug-ins to ensure no data is transferred at the point a page loads. (We’ve asked Facebook and will update this report with any response.)

Facebook also points out that other tech giants, such as Twitter and LinkedIn, deploy similar social plug-ins — suggesting the CJEU ruling will apply to other social platforms, as well as to thousands of websites across the EU where these sorts of plug-ins crop up.

“Sites with the button should make sure that they are sufficiently transparent to site visitors, and must make sure that they have a lawful basis for the transfer of the user’s personal data (e.g. if just the user’s IP address and other data stored on the user’s device by Facebook cookies) to Facebook,” Neil Brown, a telecoms, tech and internet lawyer at U.K. law firm Decoded Legal, told TechCrunch.

“If their lawful basis is consent, then they’ll need to get consent before deploying the button for it to be valid; otherwise, they’ll have done the transfer before the visitor has consented.

“If relying on legitimate interests — which might scrape by — then they’ll need to have done a legitimate interests assessment, and kept it on file (against the (admittedly unlikely) day that a regulator asks to see it), and they’ll need to have a mechanism by which a site visitor can object to the transfer.”

“Basically, if organisations are taking on board the recent guidance from the ICO and CNIL on cookie compliance, wrapping in Facebook ‘Like’ and other similar things in with that work would be sensible,” Brown added.

Also commenting on the judgement, Michael Veale, a UK-based researcher in tech and privacy law/policy, said it raises questions about how Facebook will comply with Europe’s data protection framework for any further processing it carries out of the social plug-in data.

“The whole judgement to me leaves open the question ‘on what grounds can Facebook justify further processing of data from their web tracking code?’” he told us. “If they have to provide transparency for this further processing, which would take them out of joint controllership into sole controllership, to whom and when is it provided?

“If they have to demonstrate they would win a legitimate interests test, how will that be affected by the difficulty in delivering that transparency to data subjects?

“Can Facebook do a backflip and say that for users of their service, their terms of service on their platform justifies the further use of data for which individuals must have separately been made aware of by the website where it was collected?

“The question then quite clearly boils down to non-users, or to users who are effectively non-users to Facebook through effective use of technologies such as Mozilla’s browser tab isolation.”

How far a tracking pixel could be considered a ‘similar device’ to a cookie is another question to consider, he said.

The tracking of non-Facebook users via social plug-ins certainly continues to be a hot-button legal issue for Facebook in Europe — where the company has twice lost in court to Belgium’s privacy watchdog on this issue. (Facebook has continued to appeal.)

Facebook founder Mark Zuckerberg also faced questions about tracking non-users last year, from MEPs in the European Parliament — who pressed him on whether Facebook uses data on non-users for any other uses vs the security purpose of “keeping bad content out” that he claimed requires Facebook to track everyone on the mainstream Internet.

MEPs also wanted to know how non-users can stop their data being transferred to Facebook. Zuckerberg gave no answer, likely because there’s currently no way for non-users to stop their data being sucked up by Facebook’s servers, short of staying off the mainstream Internet.

from Social – TechCrunch https://ift.tt/3116CC1


The Great Hack tells us data corrupts

This week professor David Carroll, whose dogged search for answers to how his personal data was misused plays a focal role in The Great Hack, Netflix’s documentary tackling the Facebook-Cambridge Analytica data scandal, quipped that perhaps a follow up would be more punitive for the company than the $5BN FTC fine announced the same day.

The documentary — which we previewed ahead of its general release Wednesday — does an impressive job of articulating for a mainstream audience the risks for individuals and society of unregulated surveillance capitalism, despite the complexities involved in the invisible data ‘supply chain’ that feeds the beast. Most obviously by trying to make these digital social emissions visible to the viewer — as mushrooming pop-ups overlaid on shots of smartphone users going about their everyday business, largely unaware of the pervasive tracking it enables.

Facebook is unlikely to be a fan of the treatment. In its own crisis PR around the Cambridge Analytica scandal it has sought to achieve the opposite effect; making it harder to join the data-dots embedded in its ad platform by seeking to deflect blame, bury key details and bore reporters and policymakers to death with reams of irrelevant detail — in the hope they might shift their attention elsewhere.

Data protection itself isn’t a topic that naturally lends itself to glamorous thriller treatment, of course. No amount of slick editing can transform the close and careful scrutiny of political committees into seat-of-the-pants viewing for anyone not already intimately familiar with the intricacies being picked over. And yet it’s exactly such thoughtful attention to detail that democracy demands. Without it we are all, to put it proverbially, screwed.

The Great Hack shows what happens when vital detail and context are cheaply ripped away at scale, via socially sticky content delivery platforms run by tech giants that never bothered to sweat the ethical detail of how their ad targeting tools could be repurposed by malign interests to sow social discord and/or manipulate voter opinion en masse.

Or indeed used by an official candidate for high office in a democratic society that lacks legal safeguards against data misuse.

But while the documentary packs in a lot over an almost two-hour span, retelling the story of Cambridge Analytica’s role in the 2016 Trump presidential election campaign; exploring links to the UK’s Brexit leave vote; and zooming out to show a little of the wider impact of social media disinformation campaigns on various elections around the world, the viewer is left with plenty of questions. Not least the ones Carroll repeats towards the end of the film: What information had Cambridge Analytica amassed on him? Where did they get it from? What did they use it for? — apparently resigning himself to never knowing. The disgraced data firm chose declaring bankruptcy and folding back into its shell vs handing over the stolen goods and its algorithmic secrets.

There’s no doubt over the other question Carroll poses early on in the film: could he delete his information? The lack of control over what’s done with people’s information is the central point around which the documentary pivots. The key warning being there’s no magical cleansing fire that can purge every digitally copied personal thing that’s put out there.

And while Carroll is shown able to tap into European data rights (purely by merit of Cambridge Analytica having processed his data in the UK) to try and get answers, the lack of control holds true in the US. Here, the absence of a legal framework to protect privacy is shown as the catalyzing fuel for the ‘great hack’, and also shown enabling the ongoing data-free-for-all that underpins almost all ad-supported, Internet-delivered services. tl;dr: Your phone doesn’t need to listen to you if it’s tracking everything else you do with it.

The film’s other obsession is the breathtaking scale of the thing. One focal moment is when we hear another central character, Cambridge Analytica’s Brittany Kaiser, dispassionately recounting how data surpassed oil in value last year — as if that’s all the explanation needed for the terrible behavior on show.

“Data’s the most valuable asset on Earth,” she monotones. The staggering value of digital stuff is thus fingered as an irresistible, manipulative force also sucking in bright minds to work at data firms like Cambridge Analytica — even at the expense of their own claimed political allegiances, in the conflicted case of Kaiser.

If knowledge is power and power corrupts, the construction can be refined further to ‘data corrupts’, is the suggestion.

The filmmakers linger long on Kaiser, which can seem to humanize her, as they show what appear to be vulnerable or intimate moments. Yet they do this without ever entirely getting under her skin or allowing her role in the scandal to be fully resolved.

She’s often allowed to tell her narrative from behind dark glasses and a hat — which has the opposite effect on how we’re invited to perceive her. Questions about her motivations are never far away. It’s a human mystery linked to Cambridge Analytica’s money-minting algorithmic blackbox.

Nor is there any attempt by the filmmakers to mine Kaiser for answers themselves. It’s a documentary that spotlights mysteries and leaves questions hanging up there intact. From a journalist’s perspective that’s an inevitable frustration. Even as the story itself is much bigger than any one of its constituent parts.

It’s hard to imagine how Netflix could commission a straight up sequel to The Great Hack, given its central framing of Carroll’s data quest being combined with key moments of the Cambridge Analytica scandal. Large chunks of the film consist of captured scrutiny of, and reactions to, the story unfolding in real-time.

But in displaying the ruthlessly transactional underpinnings of social platforms where the world’s smartphone users go to kill time, unwittingly trading away their agency in the process, Netflix has really just begun to open up the defining story of our time.

from Social – TechCrunch https://ift.tt/2OofRuT


Twitter Q2 beats on sales of $841M and EPS of $0.20, new metric of mDAUs up to 139M

Two days after Facebook reported growing numbers (even amid its regulatory turmoil), its social media counterpart Twitter today announced its Q2 results. The company made $841 million in overall revenues, up 18% on a year ago; with EPS and net income respectively at $1.43 and $1.1 billion, a huge bump due to a “significant income tax benefit” related to the establishment of a deferred tax asset for corporate structuring for certain geographies, Twitter said.

Without that, non-GAAP diluted EPS was $0.20 on non-GAAP adjusted net income of $156 million.

Monetizable Daily Active Users — Twitter’s new, preferred audience metric — is now at 139 million, which Twitter says is up 14% on a year ago.

The figures beat on revenues and edged out estimates for EPS: Analysts were expecting earnings per share of around $0.19 on revenues of just over $829 million for the quarter. A year ago, Twitter posted an EPS of $0.17 on sales of $710.5 million, and last quarter, the company handily beat analyst expectations on sales of $787 million and diluted EPS of $0.25.

GAAP operating income for the quarter was $76 million, down from $80 million a year ago.

The U.S. continues to be Twitter’s revenue engine, the company said. It accounted for $455 million of its sales, up 24%, while international revenue was $386 million, up just 12%. Japan continues to be Twitter’s No. 2 market, up 9% and accounting for $133 million of its overall sales.

Meanwhile, advertising continues to be the most important revenue stream for the company (one reason why mDAUs is now its preferred metric, too). It made $727 million in advertising revenues in Q2, up 21% on a year ago. Twitter noted that video ad formats “continued to show strength,” singling out its Video Website Card, In-Stream Video Ads and First View ads. Data licensing, the other component of Twitter’s business model, was $114 million, up just 4%.
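As a quick sanity check on the breakdown above, here is a minimal sketch that recomputes the splits from the figures quoted in this report (all in millions of US dollars). The variable and function names are ours for illustration, not Twitter's, and the percentages are derived here rather than taken from the company's letter.

```python
# Figures in millions of US dollars, as quoted in the article.
TOTAL_REVENUE = 841

# Two independent ways of slicing the same quarterly revenue.
by_region = {"US": 455, "International": 386}
by_stream = {"Advertising": 727, "Data licensing": 114}


def shares(parts):
    """Return each part as a percentage of the sum of all parts (1 decimal)."""
    total = sum(parts.values())
    return {name: round(100 * value / total, 1) for name, value in parts.items()}


# Both breakdowns should add up to the headline revenue figure.
assert sum(by_region.values()) == TOTAL_REVENUE
assert sum(by_stream.values()) == TOTAL_REVENUE

region_shares = shares(by_region)
stream_shares = shares(by_stream)

for label, table in (("By region", region_shares), ("By stream", stream_shares)):
    print(label)
    for name, pct in table.items():
        print(f"  {name}: {pct}%")
```

Both slices sum to the $841 million headline figure, with advertising working out to a little over 86% of the total on these numbers.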

One of the more notable figures in this latest report is a new metric called “monetizable daily active users,” which Twitter has introduced to replace monthly and daily active users; mDAUs is based on Twitter users who logged in or were “otherwise authenticated and accessed Twitter on any given day through twitter.com or Twitter applications that are able to show ads,” according to the company.

The advertising aspect is the key part: Twitter’s previous metrics, the more established MAU and DAU figures that other companies typically provide, did not distinguish which users were served ads, and which were not.

Twitter’s argument has been that MAUs and DAUs are not a great picture of the company’s business prospects because of that fact, and so it announced some time ago that it would stop reporting these figures, moving instead to mDAUs.

Be that as it may, it’s notable that the MAU figure had been a problematic one for Twitter: in the last quarter, the company’s MAUs were 330 million, a drop of 6 million users compared to a year ago, and people had been using the generally sluggish growth (and sometimes decline) of those numbers to underscore the contention that Twitter had a growth problem.

Moving to mDAUs is a way for Twitter to de-emphasize that view and to put a spotlight on more encouraging numbers: those that show Twitter is increasing its advertising base. Nevertheless, Twitter acknowledges that it’s not standard, and so harder to use as comparable against anything other than Twitter itself. “Our calculation of mDAU is not based on any standardized industry methodology and is not necessarily calculated in the same manner or comparable to similarly titled measures presented by other companies,” it noted in a recent letter to shareholders.

The company is still relatively young, and continues to tinker and make changes — some big, some small — to both its back end and user interface. Some have been made to address some of the larger issues that people have been (often critically) vocal about, such as coping with harassment or making the site more user-friendly for power-Tweeters, new adopters and everyone in between. Others are to continue building Twitter as a business, which means making it more advertising and media-partner friendly.

Not all the changes are always positive. There’s been a fair amount of backlash over the company’s new desktop design, which it introduced this month and which features a much wider section of the page dedicated to the main news feed. I’m guessing this is in part to lay the groundwork for featuring larger media files, which should help it continue growing revenues in those areas. Indeed, this week Twitter announced a deal to stream Olympics coverage, likely helped by showing NBC that it is making efforts to make the experience a more pleasing one for Twitter users, but it’s not all about entertainment: the larger news feed will also help Twitter sell more advertising. It will be interesting to see how and if it proves to be a headwind in future quarters.

Updated with EPS figures based on non-GAAP diluted net income provided by Twitter in a separate note to TechCrunch (figures that it, frustratingly, didn’t publish in the actual shareholders’ letter).

from Social – TechCrunch https://ift.tt/2Zj9dqQ


Muzmatch adds $7M to swipe right on Muslim majority markets

Muzmatch, a matchmaking app for Muslims, has just swiped a $7 million Series A on the back of continued momentum for its community sensitive approach to soulmate searching for people of the Islamic faith.

It now has more than 1.5M users of its apps, across 210 countries, swiping, matching and chatting online as they try to find ‘the one’.

The funding, which Muzmatch says will help fuel growth in key international markets, is jointly led by US hedge fund Luxor Capital, and Silicon Valley accelerator Y Combinator — the latter having previously selected Muzmatch for its summer 2017 batch of startups.

Last year the team also took in a $1.75M seed, led by Fabrice Grinda’s FJ Labs, YC and others.

We first covered the startup two years ago when its founders were just graduating from YC. At that time there were two of them building the business: Shahzad Younas and Ryan Brodie — a perhaps unlikely pairing in this context, given Brodie’s lack of a Muslim background. He joined after meeting Younas, who had earlier quit his job as an investment banker to launch Muzmatch. Brodie got excited by the idea and early traction for the MVP. The pair went on to ship a relaunch of the app in mid 2016 which helped snag them a place at YC.

So why did Younas and Brodie unmatch? All the remaining founder can say publicly is that its investors are buying Brodie’s stake. (While, in a note on LinkedIn — celebrating what he dubs the “bittersweet” news of Muzmatch’s Series A — Brodie writes: “Separate to this raise I decided to sell my stake in the company. This is not from a lack of faith — on the contrary — it’s simply the right time for me to move on to startup number 4 now with the capital to take big risks.”)

Asked what’s harder, finding a steady co-founder or finding a life partner, Younas responds with a laugh. “With myself and Ryan, full credit, when we first joined together we did commit to each other, I guess, a period of time of really going for it,” he ventures, reaching for the phrase “conscious uncoupling” to sum up how things went down. “We both literally put blood sweat and tears into the app, into growing what it is. And for sure without him we wouldn’t be as far as we are now, that’s definitely true.”

“For me it’s a fantastic outcome for him. I’m genuinely super happy for him. For someone of his age and at that time of his life — now he’s got the ability to start another startup and back himself, which is amazing. Not many people have that opportunity,” he adds.

Younas says he isn’t looking for another co-founder at this stage of the business. Though he notes they have just hired a CTO — “purely because there’s so much to do that I want to make sure I’ve got a few people in certain areas”.

The team has grown from just four people seven months ago to 17 now. With the Series A the plan is to further expand headcount to almost 30.

“In terms of a co-founder, I don’t think, necessarily, at this point it’s needed,” Younas tells TechCrunch. “I obviously understand this community a lot. I’ve equally grown in terms of my role in the company and understanding various parts of the company. You get this experience by doing — so now I think definitely it helps having the simplicity of a single founder and really guiding it along.”

Despite the co-founders parting ways there’s no doubting Muzmatch’s momentum. Aside from solid growth of its user base (it was reporting ~200k two years ago), its press release touts 30,000+ “successes” worldwide, which Younas says translates to people who have left the app and told it they did so because they met someone on Muzmatch.

He reckons at least half of those left in order to get married — and for a matchmaking app that is the ultimate measure of success.

“Everywhere I go I’m meeting people who have met on Muzmatch. It has been really transformative for the Muslim community where we’ve taken off — and it is amazing to see, genuinely,” he says, suggesting the real success metric is “much higher because so many people don’t tell us”.

Nor is he worried about being too successful, despite 100 people a day leaving because they met someone on the app. “For us that’s literally the best thing that can happen because we’ve grown mostly by word of mouth — people telling their friends I met someone on your app. Muslim weddings are quite big, a lot of people attend and word does spread,” he says.

Muzmatch was already profitable two years ago (and still is, for “some” months, though that’s not been a focus), which has given it leverage to focus on growing at a pace it’s comfortable with as a young startup. But the plan with the Series A cash is to accelerate growth by focusing attention internationally on Muslim majority markets vs an early focus on markets, including the UK and the US, with Muslim minority populations.

This suggests potential pitfalls lie ahead for the team to manage growth in a sustainable way — ensuring scaling usage doesn’t outstrip their ability to maintain the ‘safe space’ feel the target users need, while at the same time catering to the needs of an increasingly diverse community of Muslim singles.

“We’re going to be focusing on Muslim majority countries where we feel that they would be more receptive to technology. There’s slightly less of a taboo around finding someone online. There’s culture changes already happening, etc.,” he says, declining to name the specific markets they’ll be fixing on. “That’s definitely what we’re looking for initially. That will obviously allow us to scale in a big way going forward.

“We’ve always done [marketing] in a very data-driven way,” he adds, discussing his approach to growth. “Up til now I’ve led on that. Pretty much everything in this company I’ve self taught. So I learnt, essentially, how to build a growth engine, how to scale and optimize campaigns, digital spend, and these big guys have seen our data and they’re impressed with the progress we’ve made, and the customer acquisition costs that we’ve achieved, considering we really are targeting quite a niche market… Up til now we closed our Series A with more than half our seed round in our accounts.”

Muzmatch has also laid the groundwork for the planned international push, having already fully localized the app — which is live in 14 languages, including right to left languages like Arabic.

“We’re localized and we get a lot of organic users everywhere but obviously once you focus on a particular area — in terms of content, in terms of your brand etc — then it really does start to take off,” adds Younas.

The team’s careful catering to the needs of its target community — via things like manual moderation of every profile and offering an optional chaperoning feature for in-app chats — i.e. rather than just ripping out a ‘Tinder for Muslims’ clone, can surely take some credit for helping to grow the market for Muslim matchmaking apps overall.

“Shahzad has clearly made something that people want. He is a resourceful founder who has been listening to his users and in the process has developed an invaluable service for the Muslim community, in a way that mainstream companies have failed to do,” says YC partner Tim Brady in a supporting statement.

But the flip side of attracting attention and spotlighting a commercial opportunity means Muzmatch now faces increased competition — such as from the likes of Dubai-based Veil: A rival matchmaking app which has recently turned heads with a ‘digital veil’ feature that applies an opaque filter to all profile photos, male and female, until a mutual match is made.

Muzmatch also lets users hide their photos, if they choose. But it has resisted imposing a one-size-fits-all template on the user experience — exactly in order that it can appeal more broadly, regardless of the user’s level of religious adherence (it has even attracted non-Muslim users with a genuine interest in meeting a life partner).

Younas says he’s not worried about fresh faces entering the same matchmaking app space — couching it as a validation of the market.

He’s also dismissive of gimmicky startups that can often pass through the dating space, usually on a fast burn to nowhere. Though he is expecting more competition from major players, such as Tinder-owner Match, which he notes has been eyeing up some of the same geographical markets.

“We know there’s going to be attention in this area,” he says. “Our goal is to basically continue to be the dominant player but for us to race ahead in terms of the quality of our product offering and obviously our size. That’s the goal. Having this investment definitely gives us that ammo to really go for it. But by the same token I’d never want us to be that silly startup that just burns a tonne of money and ends up nowhere.”

“It’s a very complex population, it’s very diverse in terms of culture, in terms of tradition,” he adds of the target market. “We so far have successfully been able to navigate that — of creating a product that does, to the user, marries technology with respecting the faith.”

Feature development is now front of mind for Muzmatch as it moves into the next phase of growth, and as — Younas hopes — it has more time to focus on finessing what its product offers, having bagged investment by proving product market fit and showing traction.

“The first thing that we’re going to be doing is an actual refreshing of our brand,” he says. “A bit of a rebrand, keeping the same name, a bit of a refresh of our brand, tidying that up. Actually refreshing the app, top to bottom. Part of that is looking at changes that have happened in the — call it — ‘dating space’. Because what we’ve always tried to do is look at the good that’s happening, get rid of the bad stuff, and try and package it and make it applicable to a Muslim audience.

“I think that’s what we’ve done really well. And I always wanted to innovate on that — so we’ve got a bunch of ideas around a complete refresh of the app.”

Video is one area they’re experimenting with for future features. TechCrunch’s interview with Younas takes place via a video chat using what looks to be its own videoconferencing platform, though there’s not currently a feature in Muzmatch that lets users chat remotely via video.

Its challenge on this front will be implementing richer comms features in a way that a diverse community of religious users can accept.

“I want to (and we have this firmly on our roadmap, and I hope that it’s within six months) be introducing or bringing ways to connect people on our platform that they’ve never been able to do before. That’s going to be key. Elements of video is going to be really interesting,” says Younas, teasing their thinking around video.

“The key for us is how do we do [videochat] in a way that is sensible and equally gives both sides control. That’s the key.”

Nor will it just be “simple video”. He says they’re also looking at how they can use profile data more creatively, especially for helping more private users connect around shared personality traits.

“There’s a lot of things we want to do within the app of really showing the richness of our profiles. One thing that we have that other apps don’t have are profiles that are really rich. So we have about 22 different data points on the profile. There’s a lot that people do and want to share. So the goal for us is how do we really try and show that off?

“We have a segment of profiles where the photos are private, right, people want that anonymity… so the goal for us is then saying how can we really show your personality, what you’re about in a really good way. And right now I would argue we don’t quite do it well enough. We’ve got a tonne of ideas and part of the rebrand and the refresh will be really emphasizing and helping that segment of society who do want to be private but equally want people to understand what they’re about.”

Where does he want the business to be in 12 months’ time? With a more polished product and “a lot of key features in the way of connecting the community around marriage — or just community in general”.

In terms of growth the aim is at least 4x where they are now.

“These are ambitious targets. Especially given the amount that we want to re-engineer and rebuild but now is the time,” he adds. “Now we have the fortune of having a big team, of having the investment. And really focusing and finessing our product… Really give it a lot of love and really give it a lot of the things we’ve always wanted to do and never quite had the time to do. That’s the key.

“I’m personally super excited about some of the stuff coming up because it’s a big enabler — growing the team and having the ability to really execute on this a lot faster.”

from Social – TechCrunch https://ift.tt/2K3ROfm


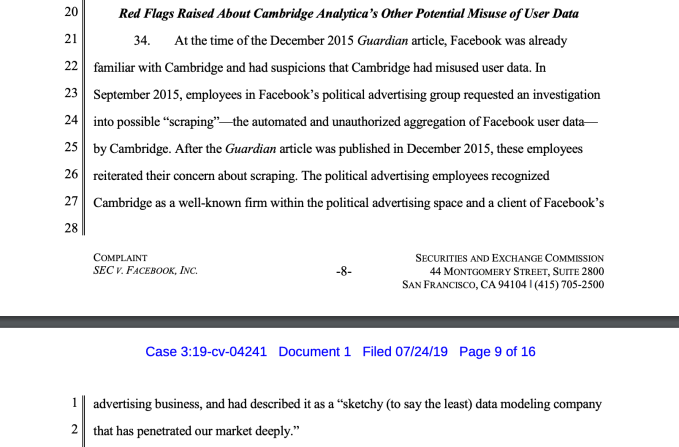

Facebook ignored staff warnings about “sketchy” Cambridge Analytica in September 2015

Facebook employees tried to alert the company about the activity of Cambridge Analytica as early as September 2015, per the SEC’s complaint against the company which was published yesterday.

This chimes with a court filing that emerged earlier this year — which also suggested Facebook knew of concerns about the controversial data company earlier than it had publicly said, including in repeat testimony to a UK parliamentary committee last year.

Facebook only finally kicked the controversial data firm off its ad platform in March 2018 when investigative journalists had blown the lid off the story.

In a section on “red flags” raised about the scandal-hit company Cambridge Analytica’s potential misuse of Facebook user data, the SEC complaint reveals that Facebook already knew of concerns raised by staffers in its political advertising unit, who described CA as a “sketchy (to say the least) data modeling company that has penetrated our market deeply”.

Amid a flurry of major headlines for the company yesterday, including a $5BN FTC fine — all of which was selectively dumped on the same day media attention was focused on Mueller’s testimony before Congress — Facebook quietly disclosed it had also agreed to pay $100M to the SEC to settle a complaint over failures to properly disclose data abuse risks to its investors.

This tidbit was slipped out towards the end of a lengthy blog post by Facebook general counsel Colin Stretch which focused on responding to the FTC order with promises to turn over a new leaf on privacy.

CEO Mark Zuckerberg also made no mention of the SEC settlement in his own Facebook note about what he dubbed a “historic fine”.

As my TC colleague Devin Coldewey wrote yesterday, the FTC settlement amounts to a ‘get out of jail’ card for the company’s senior execs by granting them blanket immunity from known and unknown past data crimes.

‘Historic fine’ is therefore quite the spin to put on being rich enough and powerful enough to own the rule of law.

And by nesting its disclosure of the SEC settlement inside effusive privacy-washing discussion of the FTC’s “historic” action, Facebook looks to be hoping to divert attention from some really awkward details in its narrative about the Cambridge Analytica scandal which highlight ongoing inconsistencies and contradictions, to put it politely.

The SEC complaint underlines that Facebook staff were aware of the dubious activity of Cambridge Analytica on its platform prior to the December 2015 Guardian story — which CEO Mark Zuckerberg has repeatedly claimed was when he personally became aware of the problem.

Asked about the details in the SEC document, a Facebook spokesman pointed us to comments it made earlier this year when court filings emerged that also suggested staff knew in September 2015. In this statement, from March, it says “employees heard speculation that Cambridge Analytica was scraping data, something that is unfortunately common for any internet service”, and further claims it was “not aware of the transfer of data from Kogan/GSR to Cambridge Analytica until December 2015”, adding: “When Facebook learned about Kogan’s breach of Facebook’s data use policies, we took action.”

Facebook staffers were also aware of concerns about Cambridge Analytica’s “sketchy” business when, around November 2015, Facebook employed psychology researcher Joseph Chancellor, the co-founder of app developer GSR, which, as Facebook has sought to paint it, is the ‘rogue’ developer that breached its platform policies by selling Facebook user data to Cambridge Analytica.

This means Facebook employed a man who had breached its own platform policies by selling user data to a data company which Facebook’s own staff had urged, months prior, be investigated for policy-violating scraping of Facebook data, per the SEC complaint.

Fast forward to March 2018 and press reports revealing the scale and intent of the Cambridge Analytica data heist blew up into a global data scandal for Facebook, wiping billions off its share price.

The really awkward question that Facebook has continued not to answer — and which every lawmaker, journalist and investor should therefore be putting to the company at every available opportunity — is why it employed GSR co-founder Chancellor in the first place?

Chancellor has never been made available by Facebook to the media for questions. He also quietly left Facebook last fall — we must assume with a generous exit package in exchange for his continued silence. (Assume because neither Facebook nor Chancellor have explained how he came to be hired.)

At the time of his departure, Facebook also made no comment on the reasons for Chancellor leaving — beyond confirming he had left.

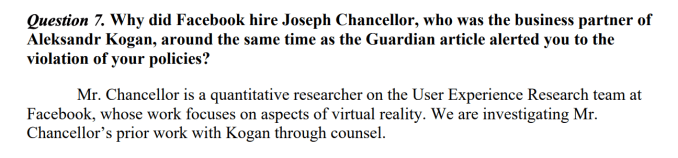

Facebook has never given a straight answer on why it hired Chancellor. See, for example, its written response to a Senate Commerce Committee question, which is pure, textbook misdirection: it responds with irrelevant details that do not explain how Facebook came to identify him for a role at the company in the first place (“Mr. Chancellor is a quantitative researcher on the User Experience Research team at Facebook, whose work focuses on aspects of virtual reality. We are investigating Mr. Chancellor’s prior work with Kogan through counsel”).

What was the outcome of Facebook’s internal investigation of Chancellor’s prior work? We don’t know because again Facebook isn’t saying anything.

More importantly, the company has continued to stonewall on why it hired someone intimately linked to a massive political data scandal that’s now just landed it an “historic fine”.

We asked Facebook to explain why it hired Chancellor — given what the SEC complaint shows it knew of Cambridge Analytica’s “sketchy” dealings — and got the same non-answer in response: “Mr Chancellor was a quantitative researcher on the User Experience Research team at Facebook, whose work focused on aspects of virtual reality. He is no longer employed by Facebook.”

We’ve asked Facebook to clarify why Chancellor was hired despite internal staff concerns about Cambridge Analytica, the company his firm was set up to sell Facebook data to; and how, of all the professionals it could have hired, Facebook identified Chancellor in the first place. We will update this post with any response. (A search for ‘quantitative researcher’ on LinkedIn’s platform returns more than 177,000 results for professionals who use the descriptor in their profiles.)

Earlier this month a UK parliamentary committee accused the company of contradicting itself in separate testimonies on both sides of the Atlantic over knowledge of improper data access by third-party apps.

The committee grilled multiple Facebook and Cambridge Analytica employees (and/or former employees) last year as part of a wide-ranging enquiry into online disinformation and the use of social media data for political campaigning — calling in its final report for Facebook to face privacy and antitrust probes.

A spokeswoman for the DCMS committee told us it will be writing to Facebook next week to ask for further clarification of testimonies given last year in light of the timeline contained in the SEC complaint.

Under questioning in Congress last year, Facebook founder Zuckerberg also personally told congressman Mike Doyle that Facebook had first learned about Cambridge Analytica using Facebook data as a result of the December 2015 Guardian article.

Yet, as the SEC complaint underlines, Facebook staff had raised concerns months earlier. So, er, awkward.

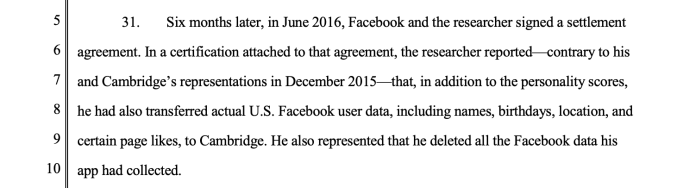

There are more awkward details in the SEC complaint that Facebook seems keen to bury too — including that as part of a signed settlement agreement, GSR’s other co-founder Aleksandr Kogan told it in June 2016 that he had, in addition to transferring modelled personality profile data on 30M Facebook users to Cambridge Analytica, sold the latter “a substantial quantity of the underlying Facebook data” on the same set of individuals he’d profiled.

This US Facebook user data included personal information such as names, location, birthdays, gender and a sub-set of page likes.

Raw Facebook data being grabbed and sold does add some rather colorful shading around the standard Facebook line — i.e. that its business is nothing to do with selling user data. Colorful because while Facebook itself might not sell user data — it just rents access to your data and thereby sells your attention — the company has built a platform that others have repurposed as a marketplace for exactly that, and done so right under its nose…

The SEC complaint also reveals that more than 30 Facebook employees across different corporate groups learned of Kogan’s platform policy violations — including senior managers in its comms, legal, ops, policy and privacy divisions.

The UK’s data watchdog previously identified three senior managers at Facebook who it said were involved in email exchanges prior to December 2015 regarding the GSR/Cambridge Analytica breach of Facebook users’ data, though it has not made public the names of the staff in question.

The SEC complaint suggests a far larger number of Facebook staffers knew of concerns about Cambridge Analytica earlier than the company narrative has implied up to now. The exact timeline of when each of the staffers knew is not clear from the document, however; the period it discusses runs from September 2015 to April 2017.

Despite 30+ Facebook employees being aware of GSR’s policy violation and misuse of Facebook data — by April 2017 at the latest — the company leaders had put no reporting structures in place for them to be able to pass the information to regulators.

“Facebook had no specific policies or procedures in place to assess or analyze this information for the purposes of making accurate disclosures in Facebook’s periodic filings,” the SEC notes.

The complaint goes on to document various additional “red flags” it says were raised to Facebook throughout 2016 suggesting Cambridge Analytica was misusing user data. These included various press reports on the company’s use of personality profiles to target ads, and staff in Facebook’s own political ads unit being aware that Cambridge Analytica was naming Facebook and Instagram ad audiences by personality trait for certain clients, including advocacy groups, a commercial enterprise and a political action committee.

“Despite Facebook’s suspicions about Cambridge and the red flags raised after the Guardian article, Facebook did not consider how this information should have informed the risk disclosures in its periodic filings about the possible misuse of user data,” the SEC adds.

from Social – TechCrunch https://ift.tt/2MeugHd

Daily Crunch: Facebook will pay $5B fine

The Daily Crunch is TechCrunch’s roundup of our biggest and most important stories. If you’d like to get this delivered to your inbox every day at around 9am Pacific, you can subscribe here.

1. Facebook settles with FTC: $5 billion and new privacy guarantees

Although the figure is in line with what was reported before the official announcement, the FTC notes this is the largest fine for any company violating consumer privacy.

In addition to the payment, Facebook has agreed to new oversight, with a board committee on privacy covering WhatsApp and Instagram, as well as Facebook itself.

2. Netflix launches Rs 199 ($2.80) mobile-only monthly plan in India

Netflix has a new plan to win users in India: make the entry point to its service incredibly cheap. The new tier restricts the usage to one mobile device, with standard definition viewing.

3. DOJ announces investigation into big tech

More regulatory fun! In a statement, the DOJ said that it will consider “widespread concerns that consumers, businesses, and entrepreneurs have expressed about search, social media and some retail services online.”

4. Andreessen Horowitz values camping business Hipcamp at $127M

The San Francisco-based startup provides a “people-powered platform” that unlocks access to private land for camping, glamping or just a beautiful spot to park your RV.

5. Google intros Gallery Go offline photo editor

The new product joins a suite of Google apps created specifically for users in developing markets, where solid online connections aren’t always a given.

6. Tile finds another $45M to expand its item-tracking devices and platform

Tile makes popular square-shaped tags to help people keep track of physical belongings like keys and bags. Recently, it’s been linking up with chipmakers to expand into wireless headsets and other electronics.

7. Digging into the Roblox growth strategy

After 15 years, the company has accumulated 90 million users and a new $150 million venture funding war chest. (Extra Crunch membership required.)

from Social – TechCrunch https://ift.tt/2y6om2J
