If you’re a UX designer you won’t need this article to tell you about dark pattern design. But perhaps you chose to tap here out of a desire to reaffirm what you already know — to feel good about your professional expertise.

Or was it that your conscience pricked you? Go on, you can be honest… or, well, can you?

A third possibility: Perhaps an app you were using presented this article in a way that persuaded you to tap on it rather than on some other piece of digital content. And it’s those sorts of little imperceptible nudges — what to notice, where to tap/click — that we’re talking about when we talk about dark pattern design.

But not just that. The darkness comes into play because UX design choices are being selected to be intentionally deceptive. To nudge the user to give up more than they realize. Or to agree to things they probably wouldn’t if they genuinely understood the decisions they were being pushed to make.

To put it plainly, dark pattern design is deception and dishonesty by design… Still sitting comfortably?

The technique, as it’s deployed online today, often feeds off and exploits the fact that content-overloaded consumers skim-read stuff they’re presented with, especially if it looks dull and they’re in the midst of trying to do something else — like sign up to a service, complete a purchase, get to something they actually want to look at, or find out what their friends have sent them.

Manipulative timing is a key element of dark pattern design. In other words, when you see a notification can determine how you respond to it, or whether you even notice it. Interruptions generally pile on the cognitive overload, and deceptive design deploys them to make it harder for a web user to be fully in control of their faculties during a key moment of decision.

Dark patterns used to obtain consent to collect users’ personal data often combine unwelcome interruption with a built in escape route — offering an easy way to get rid of the dull looking menu getting in the way of what you’re actually trying to do.

Brightly colored ‘agree and continue’ buttons are a recurring feature of this flavor of dark pattern design. These eye-catching signposts appear near universally across consent flows — to encourage users not to read or contemplate a service’s terms and conditions, and therefore not to understand what they’re agreeing to.

It’s ‘consent’ by the spotlit backdoor.

This works because humans are lazy in the face of boring and/or complex looking stuff. And because too much information easily overwhelms. Most people will take the path of least resistance. Especially if it’s being reassuringly plated up for them in handy, push-button form.

At the same time dark pattern design will ensure the opt out, if there is one, will be near invisible: greyscale text on a grey background is the usual choice.
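How invisible is "near invisible"? One way to put a rough number on it is the contrast ratio defined in the W3C's WCAG accessibility guidelines, which ask for at least 4.5:1 for normal-size text. The sketch below is an illustration only: the color values are made-up examples, not measurements from any real consent screen, and the function names are hypothetical.

```python
# Minimal sketch of the WCAG contrast-ratio calculation.
# All colors below are hypothetical examples, not taken from a real product.

WCAG_AA_MINIMUM = 4.5  # minimum ratio WCAG asks for on normal-size text


def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to its linear-light value."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple) -> float:
    """Relative luminance of an (R, G, B) color, from 0 (black) to 1 (white)."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: tuple, background: tuple) -> float:
    """Contrast ratio between two colors, from 1:1 up to 21:1."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


# Hypothetical opt-out link: mid-grey text on a light grey background.
OPT_OUT_TEXT = (153, 153, 153)
OPT_OUT_BACKGROUND = (204, 204, 204)

# Hypothetical 'agree and continue' button: white text on a strong blue.
AGREE_TEXT = (255, 255, 255)
AGREE_BUTTON = (0, 82, 204)

if __name__ == "__main__":
    for label, fg, bg in [
        ("opt out", OPT_OUT_TEXT, OPT_OUT_BACKGROUND),
        ("agree", AGREE_TEXT, AGREE_BUTTON),
    ]:
        ratio = contrast_ratio(fg, bg)
        verdict = "passes" if ratio >= WCAG_AA_MINIMUM else "fails"
        print(f"{label}: {ratio:.2f}:1 ({verdict} the 4.5:1 minimum)")
```

With these example colors the grey-on-grey opt out falls well short of the minimum while the bright button clears it, which is the asymmetry described above expressed as a number.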

Some deceptive designs even include a call to action displayed on the colorful button they do want you to press — with text that says something like ‘Okay, looks great!’ — to further push a decision.

Likewise, the less visible opt out option might use a negative suggestion to imply you’re going to miss out on something or are risking bad stuff happening by clicking here.

The horrible truth is that deceptive designs can be awfully easy to paint.

Where T&Cs are concerned, it really is shooting fish in a barrel. Because humans hate being bored or confused and there are countless ways to make decisions look off-puttingly boring or complex — be it presenting reams of impenetrable legalese in tiny greyscale lettering so no-one will bother reading it combined with defaults set to opt in when people click ‘ok’; deploying intentionally confusing phrasing and/or confusing button/toggle design that makes it impossible for the user to be sure what’s on and what’s off (and thus what’s opt out and what’s an opt in) or even whether opting out might actually mean opting into something you really don’t want…

Friction is another key tool of this dark art: for example, designs that require lots more clicks/taps and interactions if you want to opt out. Such as toggles for every single data share transaction, potentially running to hundreds of individual controls a user has to tap on vs just a few taps or even a single button to agree to everything. The weighting is intentionally all one way. And it’s not in the consumer’s favor.

Deceptive designs can also make it appear that opting out is not even possible. Such as default opting users in to sharing their data and, if they try to find a way to opt out, requiring they locate a hard-to-spot alternative click — and then also requiring they scroll to the bottom of lengthy T&Cs to unearth a buried toggle where they can in fact opt out.

Facebook used that technique to carry out a major data heist by linking WhatsApp users’ accounts with Facebook accounts in 2016. Despite prior claims that such a privacy u-turn could never happen. The vast majority of WhatsApp users likely never realized they could say no — let alone understood the privacy implications of consenting to their accounts being linked.

Ecommerce sites also sometimes suggestively present an optional (priced) add-on in a way that makes it appear like an obligatory part of the transaction. Such as using a brightly colored ‘continue’ button during a flight check out process but which also automatically bundles an optional extra like insurance, instead of plainly asking people if they want to buy it.

Or using pre-selected checkboxes to sneak low cost items or a small charity donation into a basket when a user is busy going through the check out flow — meaning many customers won’t notice it until after the purchase has been made.

Airlines have also been caught using deceptive design to upsell pricier options, such as by obscuring cheaper flights and/or masking prices so it’s harder to figure out what the most cost effective choice actually is.

Dark patterns to thwart attempts to unsubscribe are horribly, horribly common in email marketing. Such as an unsubscribe UX that requires you to click a ridiculous number of times and keep reaffirming that yes, you really do want out.

Often these additional screens are deceptively designed to resemble the ‘unsubscribe successful’ screens that people expect to see when they’ve pulled the marketing hooks out. But if you look very closely, at the typically very tiny lettering, you’ll see they’re actually still asking if you want to unsubscribe. The trick is to get you not to unsubscribe by making you think you already have.

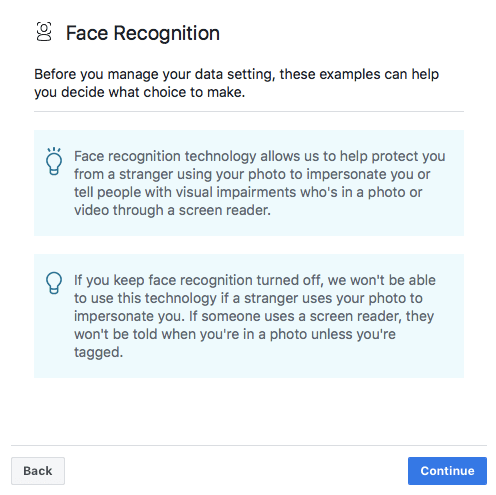

Another oft-used deceptive design that aims to manipulate online consent flows works against users by presenting a few selectively biased examples — which gives the illusion of helpful context around a decision. But actually this is a turbocharged attempt to manipulate the user by presenting a self-servingly skewed view that is in no way a full and balanced picture of the consequences of consent.

At best it’s disingenuous. More plainly it’s deceptive and dishonest.

Here’s just one example of selectively biased examples presented during a Facebook consent flow used to encourage European users to switch on its face recognition technology. Clicking ‘continue’ leads the user to the decision screen — but only after they’ve been shown this biased interstitial…

Facebook is also using emotional manipulation here, in the wording of its selective examples, by playing on people’s fears (claiming its tech will “help protect you from a stranger”) and playing on people’s sense of goodwill (claiming your consent will be helpful to people with visual impairment) — to try to squeeze agreement by making people feel fear or guilt.

You wouldn’t like this kind of emotionally manipulative behavior if a human was doing it to you. But Facebook frequently tries to manipulate its users’ feelings to get them to behave how it wants.

For instance to push users to post more content — such as by generating an artificial slideshow of “memories” from your profile and a friend’s profile, and then suggesting you share this unasked for content on your timeline (pushing you to do so because, well, what’s your friend going to think if you choose not to share it?). Of course this serves its business interests because more content posted to Facebook generates more engagement and thus more ad views.

Or — in a last ditch attempt to prevent a person from deleting their account — Facebook has been known to use the names and photos of their Facebook friends to claim such and such a person will “miss you” if you leave the service. So it’s suddenly conflating leaving Facebook with abandoning your friends.

Distraction is another deceptive design technique deployed to sneak more from the user than they realize. For example, cutesy looking cartoons that are served up to make you feel warm and fluffy about a brand, such as when they’re periodically asking you to review your privacy settings.

Again, Facebook uses this technique. The cartoony look and feel around its privacy review process is designed to make you feel reassured about giving the company more of your data.

You could even argue that Google’s entire brand is a dark pattern design: Childishly colored and sounding, it suggests something safe and fun. Playful even. The feelings it generates — and thus the work it’s doing — bear no relation to the business the company is actually in: Surveillance and people tracking to persuade you to buy things.

Another example of dark pattern design: Notifications that pop up just as you’re contemplating purchasing a flight or hotel room, say, or looking at a pair of shoes — which urge you to “hurry!” as there’s only X number of seats or pairs left.

This plays on people’s FOMO, trying to rush a transaction by making a potential customer feel like they don’t have time to think about it or do more research — and thus thwart the more rational and informed decision they might otherwise have made.

The kicker is there’s no way to know if there really were just two seats left at that price. Much like the ghost cars Uber was caught displaying in its app (which it claimed were for illustrative purposes, rather than being exactly accurate depictions of cars available to hail), web users are left having to trust that what they’re being told is genuinely true.

But why should you trust companies that are intentionally trying to mislead you?

Dark patterns point to an ethical vacuum

The phrase dark pattern design is pretty antique in Internet terms, though you’ll likely have heard it being bandied around quite a bit of late. Wikipedia credits UX designer Harry Brignull with the coinage, back in 2010, when he registered a website (darkpatterns.org) to chronicle and call out the practice as unethical.

“Dark patterns tend to perform very well in A/B and multivariate tests simply because a design that tricks users into doing something is likely to achieve more conversions than one that allows users to make an informed decision,” wrote Brignull in 2011 — highlighting exactly why web designers were skewing towards being so tricksy: Superficially it works. The anger and mistrust come later.

Close to a decade later, Brignull’s website is still valiantly calling out deceptive design. So perhaps he should rename this page ‘the hall of eternal shame’. (And yes, before you point it out, you can indeed find brands owned by TechCrunch’s parent entity Oath among those being called out for dark pattern design… It’s fair to say that dark pattern consent flows are shamefully widespread among media entities, many of which aim to monetize free content with data-thirsty ad targeting.)

Of course the underlying concept of deceptive design has roots that run right through human history. See, for example, the original Trojan horse. (A sort of ‘reverse’ dark pattern design, given the Greeks built an intentionally eye-catching spectacle to pique the Trojans’ curiosity, getting them to lower their guard and take it into the walled city, allowing the fatal trap to be sprung.)

Basically, the more tools that humans have built, the more possibilities they’ve found for pulling the wool over other people’s eyes. The Internet just kind of supercharges the practice and amplifies the associated ethical concerns because deception can be carried out remotely and at vast, vast scale. Here the people lying to you don’t even have to risk a twinge of personal guilt because they don’t have to look into your eyes while they’re doing it.

Nowadays falling foul of dark pattern design most often means you’ll have unwittingly agreed to your personal data being harvested and shared with a very large number of data brokers who profit from background trading people’s information — without making it clear they’re doing so nor what exactly they’re doing to turn your data into their gold. So, yes, you are paying for free consumer services with your privacy.

Another aspect of dark pattern design has been bent towards encouraging Internet users to form addictive habits attached to apps and services. Often these kinds of addiction-forming dark patterns are less visually obvious on a screen, unless you start counting the number of notifications you’re being plied with, or the emotional blackmail triggers you’re feeling to send a message for a ‘friendversary’, or not miss your turn in a ‘streak game’.

This is the Nir Eyal ‘hooked’ school of product design. Which has actually run into a bit of a backlash of late, with big tech now competing — at least superficially — to offer so-called ‘digital well-being’ tools to let users unhook. Yet these are tools the platforms are still very much in control of. So there’s no chance you’re going to be encouraged to abandon their service altogether.

Dark pattern design can also cost you money directly. For example if you get tricked into signing up for or continuing a subscription you didn’t really want. Though such blatantly egregious subscription deceptions are harder to get away with. Because consumers soon notice they’re getting stung for $50 a month they never intended to spend.

That’s not to say ecommerce is clean of deceptive crimes now. The dark patterns have generally just got a bit more subtle. Pushing you to transact faster than you might otherwise, say, or upselling stuff you don’t really need.

Although consumers will usually realize they’ve been sold something they didn’t want or need eventually. Which is why deceptive design isn’t a sustainable business strategy, even setting aside ethical concerns.

In short, it’s short term thinking at the expense of reputation and brand loyalty. Especially as consumers now have plenty of online platforms where they can vent and denounce brands that have tricked them. So trick your customers at your peril.

That said, it takes longer for people to realize their privacy is being sold down the river. If they even realize at all. Which is why dark pattern design has become such a core enabling tool for the vast, non-consumer facing ad tech and data brokering industry that’s grown fat by quietly sucking on people’s data.

Think of it as a bloated vampire octopus wrapped invisibly around the consumer web, using its myriad tentacles and suckers to continuously manipulate decisions and close down user agency in order to keep data flowing — with all the A/B testing techniques and gamification tools it needs to win.

“It’s become substantially worse,” agrees Brignull, discussing the practice he began critically chronicling almost a decade ago. “Tech companies are constantly in the international news for unethical behavior. This wasn’t the case 5-6 years ago. Their use of dark patterns is the tip of the iceberg. Unethical UI is a tiny thing compared to unethical business strategy.”

“UX design can be described as the way a business chooses to behave towards its customers,” he adds, saying that deceptive web design is therefore merely symptomatic of a deeper Internet malaise.

He argues the underlying issue is really about “ethical behavior in US society in general”.

The deceitful obfuscation of commercial intention certainly runs all the way through the data brokering and ad tech industries that sit behind much of the ‘free’ consumer Internet. Here consumers have plainly been kept in the dark so they cannot see and object to how their personal information is being handed around, sliced and diced, and used to try to manipulate them.

From an ad tech perspective, the concern is that manipulation doesn’t work when it’s obvious. And the goal of targeted advertising is to manipulate people’s decisions based on intelligence about them gleaned via clandestine surveillance of their online activity (so inferring who they are via their data). This might be a purchase decision. Equally it might be a vote.

The stakes have been raised considerably now that data mining and behavioral profiling are being used at scale to try to influence democratic processes.

So it’s not surprising that Facebook is so coy about explaining why a certain user on its platform is seeing a specific advert. Because if the huge surveillance operation underpinning the algorithmic decision to serve a particular ad was made clear, the person seeing it might feel manipulated. And then they would probably be less inclined to look favorably upon the brand they were being urged to buy. Or the political opinion they were being pushed to form. And Facebook’s ad tech business stands to suffer.

The dark pattern design that’s trying to nudge you to hand over your personal information is, as Brignull says, just the tip of a vast and shadowy industry that trades on deception and manipulation by design, because it relies on the lie that people don’t care about their privacy.

But people clearly do care about privacy. Just look at the lengths to which ad tech entities go to obfuscate and deceive consumers about how their data is being collected and used. If people don’t mind companies spying on them, why not just tell them plainly it’s happening?

And if people were really cool about sharing their personal and private information with anyone, and totally fine about being tracked everywhere they go and having a record kept of all the people they know and have relationships with, why would the ad tech industry need to spy on them in the first place? They could just ask up front for all your passwords.

The deception enabled by dark pattern design not only erodes privacy and has the chilling effect of putting web users under pervasive, clandestine surveillance; it also risks enabling damaging discrimination at scale. Because non-transparent decisions made off the back of inferences gleaned from data taken without people’s consent can mean that, for example, only certain types of people are shown certain types of offers and prices, while others are not.

Facebook was forced to make changes to its ad platform after it was shown that an ad-targeting category it lets advertisers target ads against, called ‘ethnic affinity’ — aka Facebook users whose online activity indicates an interest in “content relating to particular ethnic communities” — could be used to run housing and employment ads that discriminate against protected groups.

More recently the major political ad scandals relating to Kremlin-backed disinformation campaigns targeting the US and other countries via Facebook’s platform, and the massive Facebook user data heist involving the controversial political consultancy Cambridge Analytica deploying quiz apps to improperly suck out people’s data in order to build psychographic profiles for political ad targeting, have shone a spotlight on the risks that flow from platforms that operate by systematically keeping their users in the dark.

As a result of these scandals, Facebook has started offering a level of disclosure around who is paying for and running some of the ads on its platform. But plenty of aspects of its platform and operations remain shrouded. Even those components that are being opened up a bit are still obscured from view of the majority of users — thanks to the company’s continued use of dark patterns to manipulate people into acceptance without actual understanding.

And yet while dark pattern design has been the slickly successful oil in the engines of the ad tech industry for years, allowing it to get away with so much consent-less background data processing, gradually, gradually some of the shadier practices of this sector are being illuminated and shut down — including as a consequence of shoddy security practices, with so many companies involved in the trading and mining of people’s data. There are just more opportunities for data to leak.

Laws around privacy are also being tightened. And changes to EU data protection rules are a key reason why dark pattern design has bubbled back up into online conversations lately. The practice is under far greater legal threat now as GDPR tightens the rules around consent.

This week a study by the Norwegian Consumer Council criticized Facebook and Google for systematically deploying design choices that nudge people towards making decisions which negatively affect their own privacy — such as data sharing defaults, and friction injected into the process of opting out so that fewer people will.

Another manipulative design decision flagged by the report is especially illustrative of the deceptive levels to which companies will stoop to get users to do what they want — with the watchdog pointing out how Facebook paints fake red dots onto its UI in the midst of consent decision flows in order to encourage the user to think they have a message or a notification. Thereby rushing people to agree without reading any small print.

Fair and ethical design is design that requires people to opt in affirmatively to any actions that benefit the commercial service at the expense of the user’s interests. Yet all too often it’s the other way around: Web users have to go through sweating toil and effort to try to safeguard their information or avoid being stung for something they don’t want.

You might think the types of personal data that Facebook harvests are trivial — and so wonder what’s the big deal if the company is using deceptive design to obtain people’s consent? But the purposes to which people’s information can be put are not at all trivial — as the Cambridge Analytica scandal illustrates.

One of Facebook’s recent data grabs in Europe also underlines how it’s using dark patterns on its platform to attempt to normalize increasingly privacy hostile technologies.

Earlier this year it began asking Europeans for consent to processing their selfies for facial recognition purposes — a highly controversial technology that regulatory intervention in the region had previously blocked. Yet now, as a consequence of Facebook’s confidence in crafting manipulative consent flows, it’s essentially figured out a way to circumvent EU citizens’ fundamental rights — by socially engineering Europeans to override their own best interests.

Nor is this type of manipulation exclusively meted out to certain, more tightly regulated geographies; Facebook is treating all its users like this. European users just received its latest set of dark pattern designs first, ahead of a global rollout, thanks to the bloc’s new data protection regulation coming into force on May 25.

CEO Mark Zuckerberg even went so far as to gloat about the success of this deceptive modus operandi on stage at a European conference in May — claiming the “vast majority” of users were “willingly” opting in to targeted advertising via its new consent flow.

In truth the consent flow is manipulative, and Facebook does not even offer an absolute opt out of targeted advertising on its platform. The ‘choice’ it gives users is to agree to its targeted advertising or to delete their account and leave the service entirely. Which isn’t really a choice when balanced against the power of Facebook’s platform and the network effect it exploits to keep people using its service.

‘Forced consent’ is an early target for privacy campaign groups making use of GDPR opening the door, in certain EU member states, to collective enforcement of individuals’ data rights.

Of course if you read Facebook or Google’s PR around privacy they claim to care immensely — saying they give people all the controls they need to manage and control access to their information. But controls with dishonest instructions on how to use them aren’t really controls at all. And opt outs that don’t exist smell rather more like a lock in.

Platforms certainly remain firmly in the driving seat because — until a court tells them otherwise — they control not just the buttons and levers but the positions, sizes, colors, and ultimately the presence or otherwise of the buttons and levers.

And because these big tech ad giants have grown so dominant as services, they are able to wield huge power over their users. They even track non-users across large swathes of the rest of the Internet, giving them fewer controls still than the people who are de facto locked in (even if, technically speaking, service users might be able to delete an account or abandon a staple of the consumer web).

Big tech platforms can also leverage their size to analyze user behavior at vast scale and A/B test the dark pattern designs that trick people the best. So the notion that users have been willingly agreeing en masse to give up their privacy remains the big lie squatting atop the consumer Internet.

People are merely choosing the choice that’s being pre-selected for them.

That’s where things stand as is. But the future is looking increasingly murky for dark pattern design.

Change is in the air.

What’s changed is there are attempts to legally challenge digital disingenuousness, especially around privacy and consent. This after multiple scandals have highlighted some very shady practices being enabled by consent-less data-mining — making both the risks and the erosion of users’ rights clear.

Europe’s GDPR has tightened requirements around consent, and is creating the possibility of redress via penalties large enough to make enforcement worthwhile. It has already caused some data-dealing businesses to pull the plug entirely or exit Europe.

New laws with teeth make legal challenges viable, which was simply not the case before. Though major industry-wide change will take time, as it will require waiting for judges and courts to rule.

“It’s a very good thing,” says Brignull of GDPR. Though he’s not yet ready to call it the death blow that deceptive design really needs, cautioning: “We’ll have to wait to see whether the bite is as strong as the bark.”

In the meanwhile, every data protection scandal ramps up public awareness about how privacy is being manhandled and abused, and the risks that flow from that — both to individuals (e.g. identity fraud) and to societies as a whole (be it election interference or more broadly attempts to foment harmful social division).

So while dark pattern design is essentially ubiquitous across the consumer web of today, the deceptive practices it has been used to shield and enable are on borrowed time. The direction of travel (and the direction of innovation) is pro-privacy, pro-user control and therefore anti-deceptive-design. Even if the most embedded practitioners are far too vested to abandon their dark arts without a fight.

What, then, does the future look like? What is ‘light pattern design’? The way forward — at least where privacy and consent are concerned — must be user centric. This means genuinely asking for permission — using honesty to win trust by enabling rather than disabling user agency.

Designs must champion usability and clarity, presenting a genuine, good faith choice. Which means no privacy-hostile defaults: So opt ins, not opt outs, and consent that is freely given because it’s based on genuine information not self-serving deception, and because it can also always be revoked at will.
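To make "opt ins, not opt outs" concrete, here is a minimal sketch of what privacy-friendly defaults could look like in code. It is an illustration under stated assumptions rather than anyone's actual implementation: the `ConsentRecord` class, its method names, and the list of purposes are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical data-use purposes, for illustration only.
PURPOSES = ("ad_targeting", "face_recognition", "third_party_sharing")


@dataclass
class ConsentRecord:
    """Tracks one user's consent choices, with every purpose off by default."""

    # Nothing is enabled unless the user affirmatively opts in.
    choices: dict = field(
        default_factory=lambda: {purpose: False for purpose in PURPOSES}
    )

    def opt_in(self, purpose: str) -> None:
        """Record an affirmative choice for a single, named purpose."""
        if purpose not in self.choices:
            raise KeyError(f"unknown purpose: {purpose}")
        self.choices[purpose] = True

    def revoke(self, purpose: str) -> None:
        """Withdraw consent for one purpose; as easy as granting it."""
        if purpose not in self.choices:
            raise KeyError(f"unknown purpose: {purpose}")
        self.choices[purpose] = False

    def revoke_all(self) -> None:
        """Withdraw everything in one step, mirroring an 'agree to all' button."""
        for purpose in self.choices:
            self.choices[purpose] = False

    def allows(self, purpose: str) -> bool:
        """Unknown or unset purposes are treated as not consented."""
        return self.choices.get(purpose, False)


if __name__ == "__main__":
    record = ConsentRecord()
    print(record.allows("ad_targeting"))  # off until the user says otherwise
    record.opt_in("ad_targeting")
    print(record.allows("ad_targeting"))
    record.revoke_all()
    print(record.allows("ad_targeting"))
```

The design choice worth noting is the symmetry: withdrawing consent takes one call, exactly as granting it does, and a single `revoke_all` exists alongside any "agree to everything" shortcut.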

Design must also be empathetic. It must understand and be sensitive to diversity — offering clear options without being intentionally overwhelming. The goal is to close the perception gap between what’s being offered and what the customer thinks they’re getting.

Those who want to see a shift towards light patterns and plain dealing also point out that online transactions honestly achieved will be happier and healthier for all concerned — because they will reflect what people actually want. So rather than grabbing short term gains deceptively, companies will be laying the groundwork for brand loyalty and organic and sustainable growth.

The alternative to the light pattern path is also clear: Rising mistrust, rising anger, more scandals, and — ultimately — consumers abandoning brands and services that creep them out and make them feel used. Because no one likes feeling exploited. And even if people don’t delete an account entirely they will likely modify how they interact, sharing less, being less trusting, less engaged, seeking out alternatives that they do feel good about using.

Also inevitable if the mass deception continues: More regulation. If businesses don’t behave ethically on their own, laws will be drawn up to force change.

Because sure, you can trick people for a while. But it’s not a sustainable strategy. Just look at the political pressure now being piled on Zuckerberg by US and EU lawmakers. Deception is the long game that almost always fails in the end.

The way forward must be a new ethical deal for consumer web services — moving away from business models that monetize free access via deceptive data grabs.

This means trusting your users to put their faith in you because your business provides an innovative and honest service that people care about.

It also means rearchitecting systems to bake in privacy by design. Blockchain-based micro-payments may offer one way of opening up usage-based revenue streams that can offer an alternative or supplement to ads.

Where ad tech is concerned, there are also some interesting projects being worked on — such as the blockchain-based Brave browser which is aiming to build an ad targeting system that does local, on-device targeting (only needing to know the user’s language and a broad-brush regional location), rather than the current, cloud-based ad exchange model that’s built atop mass surveillance.

Technologists are often proud of their engineering ingenuity. But if all goes to plan, they’ll have lots more opportunities to crow about what they’ve built in future — because they won’t be too embarrassed to talk about it.

from Social – TechCrunch https://ift.tt/2yZFvhP
