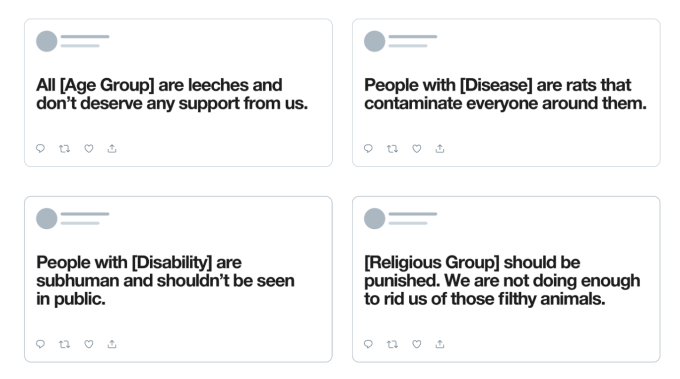

Last year, Twitter expanded its rules around hate speech to include dehumanizing speech against religious groups. Today, Twitter says it's expanding the rule to also cover language that dehumanizes people on the basis of their age, disability, or disease. The last of these is a timely addition, given that the spread of COVID-19, the disease caused by the novel coronavirus, has led some people to post hateful and sometimes racist remarks about the outbreak on Twitter.

Twitter says that tweets that broke this rule before today will need to be deleted, but they won't result in account suspensions because the rule was not in place when they were posted. Tweets published from now on, however, must abide by Twitter's updated hateful conduct policy. This overarching policy covers hateful conduct (promoting violence or making threats) as well as the use of hateful imagery and display names.

The policy already includes a ban on dehumanizing speech across a range of other categories, including race, ethnicity, national origin, caste, sexual orientation, gender, and gender identity. It has expanded over time as Twitter has tried to cover more of the areas where it wants to ban hateful speech and conduct on its platform.

One issue with Twitter's hateful conduct policy is that the company hasn't been able to keep up with enforcement, given the sheer volume of tweets posted. In addition, its reliance on users to flag tweets for review means hate speech is removed reactively rather than proactively. Twitter has also been heavily criticized for failing to enforce its policies properly and for allowing online abuse to continue.

In today’s announcement, Twitter freely admits to these and other problems. It also notes it has since done more in-depth training and extended its testing period to ensure reviewers better understand how and when to take action, as well as how to protect conversations within marginalized groups. And it created a Trust and Safety Council to help it better understand the nuances and context around complex categories, like race, ethnicity and national origin.

Unfortunately, Twitter's larger problem is that for years it has operated as a public town square where users have felt relatively free to express themselves without a real identity that would hold them accountable for their words and actions. There are valid cases for online anonymity; for example, it allows people to communicate more freely under oppressive regimes. The flip side is that it emboldens some people to make statements they otherwise wouldn't, without any social repercussions. That's not how it works in the real world.

Plus, any time Twitter tries to clamp down on hateful speech and conduct, it's accused of clamping down on free speech, as if its social media platform were protected by the First Amendment to the U.S. Constitution. According to U.S. courts, that's not how it works. In fact, a U.S. court recently ruled that YouTube isn't a public forum, meaning it doesn't have to guarantee users' free speech rights. That sets a precedent for other social platforms as well, Twitter included.

For years, Twitter has struggled to get more people to sign up and participate. But it has simultaneously worked against its own goal by failing to get a handle on abuse on its network. Instead, it's testing new products, such as disappearing Stories, that it hopes will encourage more engagement. In reality, better-enforced policies would do the trick. Educational prompts in the Compose screen, similar to those on Instagram that alert users to content likely to be reported or removed, are also long overdue.

It’s good that Twitter is expanding the language in its policy to be more encompassing. But its words need to be backed up with actions.

Twitter says its new rules are in place as of today.

from Social – TechCrunch https://ift.tt/3awPjgW

