Submit campaign ads to fact checking, limit microtargeting, cap spending, observe silence periods, or at least warn users. These are the solutions Facebook employees put forward in an open letter pleading with CEO Mark Zuckerberg and company leadership to address misinformation in political ads.

The letter, obtained by the New York Times’ Mike Isaac, insists that “Free speech and paid speech are not the same thing . . . Our current policies on fact checking people in political office, or those running for office, are a threat to what FB stands for.” The letter was posted to Facebook’s internal collaboration forum a few weeks ago.

The sentiments echo a TechCrunch opinion piece I wrote on October 13th calling on Facebook to ban political ads. Unfettered misinformation in political ads on Facebook lets politicians and their supporters spread inflammatory and inaccurate claims about their views and their rivals while racking up donations to buy more of these ads.

The social network can still offer freedom of expression to political campaigns on their own Facebook Pages while limiting the ability of the richest and most dishonest to pay to make their lies the loudest. We suggested that if Facebook won’t drop political ads, they should be fact checked and/or use an array of generic “vote for me” or “donate here” ad units that don’t allow accusations. We also criticized how microtargeting of communities vulnerable to misinformation and instant donation links make Facebook ads more dangerous than equivalent TV or radio spots.

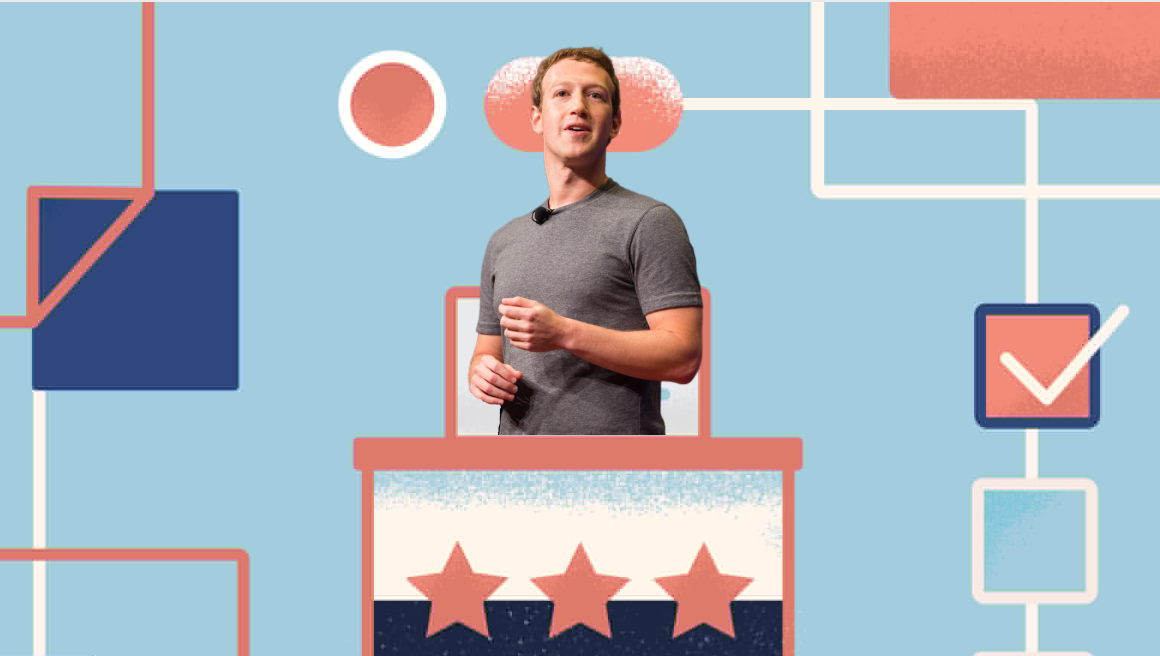

Facebook CEO Mark Zuckerberg testified before the House Financial Services Committee on Wednesday, October 23, 2019, in Washington, D.C. (Photo by Aurora Samperio/NurPhoto via Getty Images)

More than 250 of Facebook’s 35,000 staffers have signed the letter, which declares “We strongly object to this policy as it stands. It doesn’t protect voices, but instead allows politicians to weaponize our platform by targeting people who believe that content posted by political figures is trustworthy.” It suggests the current policy undermines Facebook’s election integrity work, confuses users about where misinformation is allowed, and signals Facebook is happy to profit from lies.

The solutions suggested include:

- Don’t accept political ads unless they’re subject to third-party fact checks

- Use visual design to more strongly differentiate between political ads and organic non-ad posts

- Restrict microtargeting for political ads, including the use of Custom Audiences, since microtargeting hides ads from much of the public scrutiny that Facebook claims keeps politicians honest

- Observe pre-election silence periods for political ads to limit the impact and scale of misinformation

- Limit ad spending per politician or candidate, with spending by them and their supporting political action committees combined

- Make it more visually clear to users that political ads aren’t fact-checked

A combination of these approaches could let Facebook stop short of banning political ads without allowing rampant misinformation or having to police individual claims.

Zuckerberg had stood resolute on the policy despite backlash from the press and lawmakers including Representative Alexandria Ocasio-Cortez (D-NY). She left him tongue-tied during congressional testimony when she asked exactly what kinds of misinformation were allowed in ads.

But then on Friday, Facebook blocked an ad designed to test its limits by claiming Republican Lindsey Graham had voted for Ocasio-Cortez’s Green Deal, which he actually opposes. Facebook told Reuters it will fact-check PAC ads.

One sensible approach for politicians’ ads would be for Facebook to ramp up fact-checking, starting with Presidential candidates until it has the resources to scan more. Ads fact-checked as false should receive an interstitial warning blocking their content rather than just a “false” label. That could be paired with giving political ads a bigger disclaimer, without making the ads themselves more prominent, and only allowing targeting by state.

Deciding on potential spending limits and silent periods would be messier. Low limits could even the playing field, and broad silent periods, especially during voting periods, could prevent voter suppression. Perhaps these specifics should be left to Facebook’s upcoming independent Oversight Board that acts as a supreme court for moderation decisions and policies.

Zuckerberg’s core argument for the policy is that over time, history bends towards more speech, not censorship. But that succumbs to a utopian fallacy that assumes technology evenly advantages the honest and dishonest. In reality, sensational misinformation spreads much further and faster than level-headed truth. Microtargeted ads with thousands of variants undercut and overwhelm the democratic apparatus designed to punish liars, while partisan news outlets counter attempts to call them out.

Zuckerberg wants to avoid Facebook becoming the truth police. But as we and employees have put forward, there are progressive approaches to limiting misinformation if he’s willing to step back from his philosophical orthodoxy.

The full text of the letter from Facebook employees to leadership about political ads can be found below, via the New York Times:

We are proud to work here.

Facebook stands for people expressing their voice. Creating a place where we can debate, share different opinions, and express our views is what makes our app and technologies meaningful for people all over the world.

We are proud to work for a place that enables that expression, and we believe it is imperative to evolve as societies change. As Chris Cox said, “We know the effects of social media are not neutral, and its history has not yet been written.”

This is our company.

We’re reaching out to you, the leaders of this company, because we’re worried we’re on track to undo the great strides our product teams have made in integrity over the last two years. We work here because we care, because we know that even our smallest choices impact communities at an astounding scale. We want to raise our concerns before it’s too late.

Free speech and paid speech are not the same thing.

Misinformation affects us all. Our current policies on fact checking people in political office, or those running for office, are a threat to what FB stands for. We strongly object to this policy as it stands. It doesn’t protect voices, but instead allows politicians to weaponize our platform by targeting people who believe that content posted by political figures is trustworthy.

Allowing paid civic misinformation to run on the platform in its current state has the potential to:

— Increase distrust in our platform by allowing similar paid and organic content to sit side-by-side — some with third-party fact-checking and some without. Additionally, it communicates that we are OK profiting from deliberate misinformation campaigns by those in or seeking positions of power.

— Undo integrity product work. Currently, integrity teams are working hard to give users more context on the content they see, demote violating content, and more. For the Election 2020 Lockdown, these teams made hard choices on what to support and what not to support, and this policy will undo much of that work by undermining trust in the platform. And after the 2020 Lockdown, this policy has the potential to continue to cause harm in coming elections around the world.

Proposals for improvement

Our goal is to bring awareness to our leadership that a large part of the employee body does not agree with this policy. We want to work with our leadership to develop better solutions that both protect our business and the people who use our products. We know this work is nuanced, but there are many things we can do short of eliminating political ads altogether.

These suggestions are all focused on ad-related content, not organic.

1. Hold political ads to the same standard as other ads.

a. Misinformation shared by political advertisers has an outsized detrimental impact on our community. We should not accept money for political ads without applying the standards that our other ads have to follow.

2. Stronger visual design treatment for political ads.

a. People have trouble distinguishing political ads from organic posts. We should apply a stronger design treatment to political ads that makes it easier for people to establish context.

3. Restrict targeting for political ads.

a. Currently, politicians and political campaigns can use our advanced targeting tools, such as Custom Audiences. It is common for political advertisers to upload voter rolls (which are publicly available in order to reach voters) and then use behavioral tracking tools (such as the FB pixel) and ad engagement to refine ads further. The risk with allowing this is that it’s hard for people in the electorate to participate in the “public scrutiny” that we’re saying comes along with political speech. These ads are often so micro-targeted that the conversations on our platforms are much more siloed than on other platforms. Currently we restrict targeting for housing and education and credit verticals due to a history of discrimination. We should extend similar restrictions to political advertising.

4. Broader observance of the election silence periods

a. Observe election silence in compliance with local laws and regulations. Explore a self-imposed election silence for all elections around the world to act in good faith and as good citizens.

5. Spend caps for individual politicians, regardless of source

a. FB has stated that one of the benefits of running political ads is to help more voices get heard. However, high-profile politicians can out-spend new voices and drown out the competition. To solve for this, if you have a PAC and a politician both running ads, there would be a limit that would apply to both together, rather than to each advertiser individually.

6. Clearer policies for political ads

a. If FB does not change the policies for political ads, we need to update the way they are displayed. For consumers and advertisers, it’s not immediately clear that political ads are exempt from the fact-checking that other ads go through. It should be easily understood by anyone that our advertising policies about misinformation don’t apply to original political content or ads, especially since political misinformation is more destructive than other types of misinformation.

Therefore, the section of the policies should be moved from “prohibited content” (which is not allowed at all) to “restricted content” (which is allowed with restrictions).

We want to have this conversation in an open dialog because we want to see actual change.

We are proud of the work that the integrity teams have done, and we don’t want to see that undermined by policy. Over the coming months, we’ll continue this conversation, and we look forward to working towards solutions together.

This is still our company.

from Social – TechCrunch https://ift.tt/336qc16
